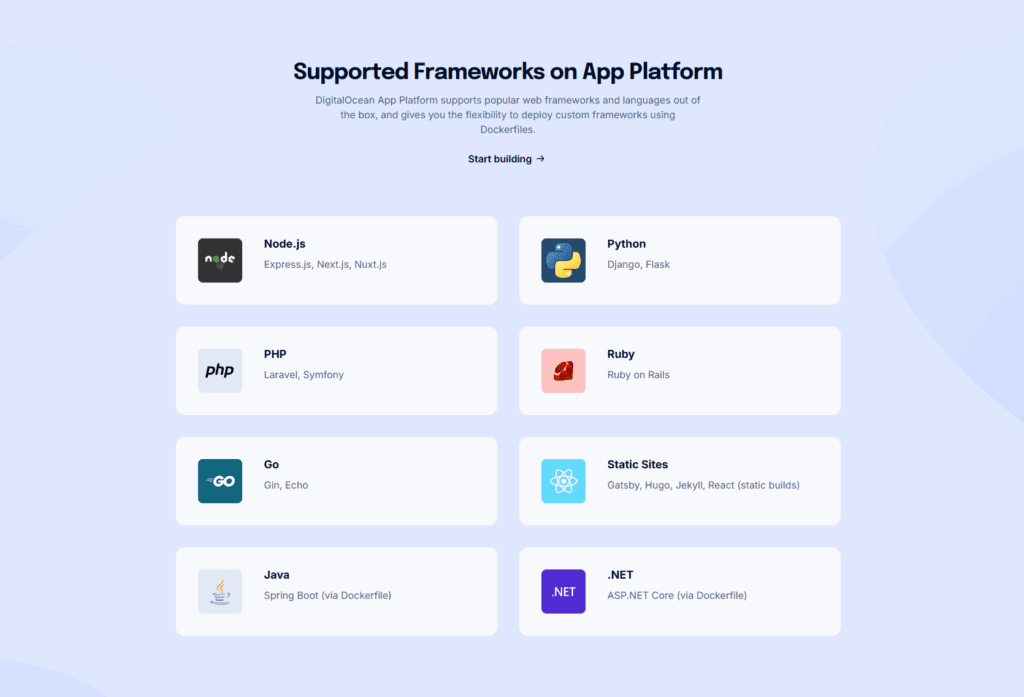

Can you run Next.js with SSR wholly under DigitalOcean App Platform? The answer is yes! App Platform gives you a managed Node.js runtime, buildpacks or Docker builds, HTTPS by default, scaling, logs, and environment variable management. From Next.js’s point of view, it’s a regular self-hosted Node server, so next start (or a “standalone” server entry) works and you retain SSR, Route Handlers, middleware, API routes, and Incremental Static Regeneration (ISR).

In this in-depth explainer, you’ll wire it up end-to-end, see both buildpack and Docker flows, understand caching and the ephemeral filesystem, and pick up practical run command patterns that match modern Next.js (15.x at the time of writing).

If you want to follow along, make sure you have a DigitalOcean account ready and also a GitHub repo with a demo application, or a Docker container that you can pull.

What “wholly under App Platform” actually means

When you deploy a Next.js app as a “Web Service” in App Platform, DigitalOcean builds your code either with Cloud Native Buildpacks (zero-Docker) or from your Dockerfile and then runs your Node server behind their managed HTTPS load balancer. You don’t need a Droplet, Nginx, or PM2. The service process is just your Node runtime starting Next.js in production mode, and the platform handles TLS, routing, scaling, and rollouts.

If your app relies on files written at runtime (for example, image uploads or SQLite databases), treat the container’s disk as temporary. Use Spaces (S3-compatible), Postgres, MySQL, Redis, or an external service for persistence. This is a standard PaaS constraint and it matters for ISR caches too; we’ll walk through it below.

The high-level deployment map

From a developer’s perspective, the minimal flow is straightforward. You push your Next.js repo, App Platform installs dependencies, runs your build command, and then executes your run command to boot the production server. You can set both commands in the UI or in an app spec file. The Node version is inferred from your package.json engines or pinned by buildpacks; keep it current for best performance and compatibility with Next.js 15.x.

The rest of this article expands that minimal flow with exact commands, variants for “standalone” output, how to pass environment variables, and what to expect from caching, ISR, middleware, and websockets.

A clean buildpack setup for SSR

If you want the simplest path with zero Docker, use buildpacks. Your package.json should have explicit build and start scripts that match what you run locally in production.

    {
      "name": "my-next-app",
      "private": true,
      "engines": {
        "node": ">=20 <=22.x"
      },
      "scripts": {
        "dev": "next dev",
        "build": "next build",
        "start": "next start -p ${PORT:-3000}"
      },
      "dependencies": {
        "next": "15.5.0",
        "react": "^19.0.0",
        "react-dom": "^19.0.0"
      }
    }

On App Platform, set your Build Command to npm run build and your Run Command to npm run start. The platform injects PORT, and the server must listen on it or health checks will fail. next start already reads PORT from the environment; the explicit -p flag makes the binding visible and gives local runs a default of 3000.
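If you ever start the server from your own entry file instead of next start, the same PORT rule applies. The sketch below shows one way to read and validate the value; resolvePort and the Env type are hypothetical names introduced here for illustration, not part of Next.js or App Platform.

```typescript
// Hypothetical helper: pick the listening port from an environment map.
// Passing the map in (instead of reading process.env directly) keeps it easy to test.
type Env = Record<string, string | undefined>;

function resolvePort(env: Env, fallback = 3000): number {
  const raw = env.PORT;
  // Use the fallback for local runs where PORT is not set.
  if (raw === undefined || raw.trim() === "") return fallback;

  const port = Number(raw);
  // Fail fast on values that cannot be a TCP port.
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid PORT value: ${raw}`);
  }
  return port;
}
```

In a Node entry file you would call resolvePort(process.env) and pass the result to listen(). Throwing on a malformed value is a design choice: a crash at boot is easier to spot in the deploy logs than a server quietly listening on the wrong port.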

This flow gives you the standard Next.js production server, which supports SSR, Route Handlers (app/api or pages/api), middleware, and ISR.

“Standalone” output and the alternative run command

Next.js can emit a compact, production-ready “standalone” server bundle that includes only the traced files your app needs. This reduces cold start times and avoids shipping a full node_modules to production.

Enable it in your Next config:

    // next.config.js (CommonJS; in next.config.mjs use `export default nextConfig` instead)
    const nextConfig = {
      output: 'standalone'
    };

    module.exports = nextConfig;

After next build, you’ll have .next/standalone/ with a server entry. In that mode, you run the server directly with Node:

    node .next/standalone/server.js

If you adopt this, set the Run Command to node .next/standalone/server.js instead of next start. The standalone folder does not include public/ or .next/static/ by default, so extend the Build Command to copy them in: npm run build && cp -r public .next/standalone/ && cp -r .next/static .next/standalone/.next/. Both modes deliver full SSR; the standalone mode mainly shrinks what you ship and start.

An app spec you can copy, understand, and evolve

If you prefer to keep infra as code, define your App Platform component in an app spec file and apply it with doctl or via the UI. Here’s a focused spec that deploys a single Next.js web service using buildpacks and the regular Next.js server. It maps the commands and environment clearly so you can extend it later.

    # .do/app.yaml
    name: my-next-app
    services:
      - name: web
        http_port: 3000
        instance_count: 1
        instance_size_slug: basic-xxs
        source_dir: /
        github:
          repo: your-org/your-repo
          branch: main
          deploy_on_push: true
        build_command: "npm run build"
        run_command: "npm run start"
        envs:
          - key: NODE_ENV
            value: production
            scope: RUN_TIME
          # NEXT_PUBLIC_ values are inlined during next build, so they need build scope too
          - key: NEXT_PUBLIC_API_BASE_URL
            value: https://api.example.com
            scope: RUN_AND_BUILD_TIME
          # App-specific build flag used as an example (not a Next.js setting)
          - key: NEXT_RUNTIME_STATIC
            value: "false"
            scope: BUILD_TIME

You can switch to the standalone server by changing run_command to node .next/standalone/server.js. In that case the build step must also copy public/ and .next/static/ into .next/standalone/, because the standalone output omits them.

A Dockerfile that’s production-grade and small

When you need deterministic builds, private OS packages, or multi-stage caching, use Docker. This example emits a small final image and supports both classic next start and output: 'standalone'. With standalone output, the final image carries only the traced production dependencies, and the container runs as a non-root user.

    # Stage 1: deps
    FROM node:lts-alpine AS deps
    WORKDIR /app
    COPY package.json package-lock.json* ./
    RUN npm ci --include=dev

    # Stage 2: build
    FROM node:lts-alpine AS build
    WORKDIR /app
    COPY --from=deps /app/node_modules ./node_modules
    COPY . .
    # For standalone builds, ensure next.config output: 'standalone'
    RUN npm run build

    # Stage 3: runtime
    FROM node:lts-alpine AS runtime
    WORKDIR /app
    ENV NODE_ENV=production
    ENV PORT=3000
    # Listen on all interfaces so the platform's router can reach the server
    ENV HOSTNAME=0.0.0.0

    # Non-root user for security; created first so copied files can be owned by it
    RUN addgroup -S nextjs && adduser -S nextjs -G nextjs

    # If using standalone, copy the traced output; otherwise copy .next and node_modules
    # Standalone (static assets and public/ are not part of the traced output):
    COPY --from=build --chown=nextjs:nextjs /app/.next/standalone ./
    COPY --from=build --chown=nextjs:nextjs /app/.next/static ./.next/static
    COPY --from=build --chown=nextjs:nextjs /app/public ./public

    # Classic server (uncomment this block and comment the standalone block if you prefer):
    # COPY --from=build --chown=nextjs:nextjs /app/.next ./.next
    # COPY --from=build /app/node_modules ./node_modules
    # COPY --from=build /app/public ./public
    # COPY --from=build /app/package.json ./package.json

    USER nextjs

    # Standalone server entry:
    CMD ["node", "server.js"]

    # For classic server instead, use:
    # CMD ["npm", "run", "start"]

In your app spec, set dockerfile_path: .do/Dockerfile (or wherever it lives) and omit the build/run commands; App Platform will use your container’s CMD.

Confirming SSR with an explicit Route Handler

To ensure you’re using server rendering, create a server-only Route Handler and a server component that fetches it on each request. This is intentionally simple and self-contained.

    // app/api/time/route.ts
    import { NextResponse } from 'next/server';

    export const dynamic = 'force-dynamic'; // always run on the server, never cached at build

    export async function GET() {
      return NextResponse.json({ now: new Date().toISOString() });
    }

    // app/page.tsx
    export const dynamic = 'force-dynamic';

    export default async function Home() {
      const res = await fetch(`${process.env.NEXT_PUBLIC_BASE_URL}/api/time`, { cache: 'no-store' });
      const { now } = await res.json();

      return (
        <main style={{ padding: 24 }}>
          <h1>SSR is live</h1>
          <p>Server time: {now}</p>
        </main>
      );
    }

On App Platform, set NEXT_PUBLIC_BASE_URL to your live URL with a scope that includes build time, because NEXT_PUBLIC_ values are inlined during next build. If you refresh the page repeatedly and the timestamp changes each time, you know SSR and server handlers are working behind the platform’s proxy.

ISR and the ephemeral filesystem

By default, Next.js stores the shared cache for generated static pages, responses, and other build outputs on the server’s filesystem when you self-host. App Platform containers are ephemeral: they can be replaced during deploys or scaling. That means any on-disk cache may be lost when the container is recreated.

You still get ISR benefits while a container is running, but don’t rely on that cache surviving a redeploy. If you need cross-instance or cross-deploy persistence, plug in a remote cache or push revalidation through an API that can recompute quickly. A pragmatic pattern is to use on-demand revalidation endpoints and a backing database or store for the canonical data.

Here’s a minimal revalidation handler that you can call after publishing content:

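    // app/api/revalidate/route.ts
    import { NextResponse } from 'next/server';
    import { revalidatePath } from 'next/cache';

    export async function POST(req: Request) {
      const { path, secret } = await req.json();

      // Reject callers that do not know the shared secret
      if (secret !== process.env.REVALIDATE_SECRET) {
        return NextResponse.json({ ok: false }, { status: 401 });
      }

      revalidatePath(path);
      return NextResponse.json({ ok: true, revalidated: path });
    }

Set REVALIDATE_SECRET in App Platform. When your CMS webhook fires, it can POST to this endpoint to refresh paths on demand. With the default on-disk cache, the revalidation applies to the instance that handles the request; a freshly started container has no cached copy yet and renders the path on its first request. If you run several instances, pair this with a shared cache so every instance sees the invalidation.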
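Because the handler passes a caller-supplied path to revalidatePath, it can help to validate that value first. The following is a minimal sketch of such a guard; isRevalidatablePath and the allowedPrefixes list are hypothetical names introduced for illustration, and the rules are an assumption about what a typical content site would want.

```typescript
// Hypothetical guard for the `path` field of a revalidation request body.
// Accepts only absolute app paths that fall under an allowed prefix.
function isRevalidatablePath(path: unknown, allowedPrefixes: string[]): path is string {
  if (typeof path !== "string") return false;
  // Must be a site-relative path, not a protocol-relative URL.
  if (!path.startsWith("/") || path.startsWith("//")) return false;
  // Reject parent-directory segments outright.
  if (path.includes("..")) return false;

  return allowedPrefixes.some((prefix) => {
    // "/blog" should match "/blog" and "/blog/post", but not "/blogger".
    const withSlash = prefix.endsWith("/") ? prefix : `${prefix}/`;
    return path === prefix || path.startsWith(withSlash);
  });
}
```

In the route handler you would check isRevalidatablePath(path, ['/blog', '/docs']) after the secret comparison and return a 400 response when it fails. The prefix list keeps a leaked secret from being used to invalidate every page on the site.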

Middleware, headers, and edge-like logic

Next.js middleware runs within the Node server in self-hosting scenarios. App Platform routes all requests over HTTPS, so you can rely on wss:// for websockets and read X-Forwarded-Proto/X-Forwarded-For for the original scheme/IP if you need them. A concise middleware.ts that sets security headers looks like this:

    // middleware.ts
    import { NextResponse } from 'next/server';
    import type { NextRequest } from 'next/server';

    export function middleware(req: NextRequest) {
      const res = NextResponse.next();

      // Example: security headers
      res.headers.set('X-Frame-Options', 'SAMEORIGIN');
      res.headers.set('X-Content-Type-Options', 'nosniff');

      return res;
    }

    export const config = {
      matcher: ['/((?!_next/static|_next/image|favicon.ico).*)']
    };

Deploy and you can verify headers on any page. Because this runs in your Node process, there’s nothing special to enable in App Platform.
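Reading the forwarded headers mentioned above usually comes down to a little string parsing. This sketch assumes the common convention that X-Forwarded-For is a comma-separated list with the original client first; readForwarded, HeaderReader, and ClientInfo are hypothetical names introduced here.

```typescript
// Minimal shape shared by the Fetch API Headers object and simple test stubs.
interface HeaderReader {
  get(name: string): string | null;
}

interface ClientInfo {
  ip: string | null;
  proto: "http" | "https" | null;
}

// Hypothetical helper: extract the original client IP and scheme from proxy headers.
function readForwarded(headers: HeaderReader): ClientInfo {
  // By convention the first entry is the original client; later entries are proxies.
  const [firstIp = ""] = (headers.get("x-forwarded-for") ?? "").split(",");
  const ip = firstIp.trim();

  const [firstProto = ""] = (headers.get("x-forwarded-proto") ?? "").split(",");
  const rawProto = firstProto.trim().toLowerCase();
  const proto = rawProto === "http" || rawProto === "https" ? rawProto : null;

  return { ip: ip !== "" ? ip : null, proto };
}
```

Inside middleware you could call readForwarded(req.headers), since the request's headers object exposes a compatible get method. Treat the result as advisory: clients can send their own X-Forwarded-For values, so it is not a safe basis for access control on its own.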

WebSockets on App Platform with Next.js

If you expose a websocket server from your Next.js Node process, connect with wss:// from the browser because App Platform terminates TLS at the edge. A small websocket server can live alongside Next.js:

    // server.ts (only if you run a custom server entry)
    import http from 'http';
    import next from 'next';
    import { WebSocketServer } from 'ws';

    const dev = process.env.NODE_ENV !== 'production';
    const app = next({ dev });
    const handle = app.getRequestHandler();
    const port = Number(process.env.PORT || 3000);

    app.prepare().then(() => {
      // Plain HTTP requests go to Next.js
      const server = http.createServer((req, res) => handle(req, res));

      // Accept websocket upgrades on /ws only
      const wss = new WebSocketServer({ server, path: '/ws' });
      wss.on('connection', (ws) => {
        ws.send(JSON.stringify({ hello: 'from server' }));
      });

      server.listen(port, () => {
        console.log(`Ready on http://localhost:${port}`);
      });
    });

Compile this entry to JavaScript and start it with Node (for example, CMD ["node","server.js"] in Docker); your client then connects to wss://YOUR_APP_DOMAIN/ws. A custom server replaces next start and cannot be combined with output: 'standalone', which generates its own server.js. For simple apps, the built-in Next.js server is enough; switch to a custom entry like this only when you need fine control.
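On the client side, the only App Platform-specific detail is choosing wss:// when the page is served over HTTPS. A small sketch of that mapping follows; toWebSocketUrl is a hypothetical helper and the /ws default path is an assumption matching the URL used above.

```typescript
// Hypothetical helper: derive a websocket URL from a page origin.
// https origins map to wss, http origins (local development) map to ws.
function toWebSocketUrl(origin: string, path = "/ws"): string {
  // Capture the scheme and the host part, ignoring any trailing slashes.
  const match = /^(https?):\/\/(.+?)\/*$/.exec(origin);
  if (!match) throw new Error(`Unsupported origin: ${origin}`);

  const scheme = match[1] === "https" ? "wss" : "ws";
  const normalizedPath = path.startsWith("/") ? path : `/${path}`;
  return `${scheme}://${match[2]}${normalizedPath}`;
}
```

In the browser you would pass window.location.origin and hand the result to new WebSocket(...). Deriving the scheme from the origin means the same client code works against localhost and the deployed app.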

Environment variables, build-time vs run-time

App Platform distinguishes variables available during the build and those present at runtime. Next.js reads .env* files locally, but in production you should define variables in the platform UI or in the app spec and mark sensitive values as encrypted. If a value must be available to the bundler (for example, feature flags that change what’s compiled), mark it as build-time; otherwise scope it to runtime. Public variables that the browser must see should be prefixed with NEXT_PUBLIC_. Next.js inlines them into the bundle during next build, so they must be available at build time (RUN_AND_BUILD_TIME in the app spec); changing one requires a rebuild, not just a restart.

In the app spec example above, NEXT_PUBLIC_API_BASE_URL falls into this group, so it should carry a scope that includes build time. A purely build-time variable might flip an experiment or include a static asset path during bundling.
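The scoping rules can be summarized as a small decision function. This is only a sketch: suggestScope is a hypothetical helper, and it assumes the documented Next.js behavior that NEXT_PUBLIC_ values are inlined during next build. The returned strings are the scope values used in the app spec.

```typescript
type Scope = "RUN_TIME" | "BUILD_TIME" | "RUN_AND_BUILD_TIME";

// Hypothetical helper: suggest an App Platform scope for an environment variable.
function suggestScope(key: string, usedInBuild: boolean, usedAtRuntime: boolean): Scope {
  // Browser-exposed values are baked in at build time; keeping them at runtime
  // as well is a conservative choice so server code sees the same value.
  if (key.startsWith("NEXT_PUBLIC_")) return "RUN_AND_BUILD_TIME";

  if (usedInBuild && usedAtRuntime) return "RUN_AND_BUILD_TIME";
  if (usedInBuild) return "BUILD_TIME";
  // Default: server-only values such as database URLs and secrets.
  return "RUN_TIME";
}
```

For example, a database connection string read only by Route Handlers would come out as RUN_TIME, while a flag read in next.config.js would come out as BUILD_TIME.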

Node versions and Next.js 15.x

Next.js 15.x supports modern Node LTS lines. App Platform’s Node buildpack tracks current LTS by default and you can pin an exact range using the engines.node field in package.json. In production, aim for Node 20 or 22 LTS for best alignment with Next.js releases, React 19 support, and contemporary middleware features. If you see mismatches, the fix is usually to declare engines and rebuild.
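If you want a version mismatch to surface at boot rather than as an obscure runtime error, a startup check is one option. The sketch below mirrors the engines range used earlier (Node 20 through 22); isSupportedNode is a hypothetical helper, and the bounds are assumptions you should adjust to your own engines field.

```typescript
// Hypothetical startup guard: is this Node version inside the supported major range?
function isSupportedNode(version: string, minMajor = 20, maxMajor = 22): boolean {
  // Accepts strings like "v20.11.1" (the format of process.version) or "22.3.0".
  const match = /^v?(\d+)\.\d+\.\d+/.exec(version);
  if (!match) return false;

  const major = Number(match[1]);
  return major >= minMajor && major <= maxMajor;
}
```

A custom entry file could call isSupportedNode(process.version) and exit with a clear message when it returns false. With the built-in next start server, declaring engines in package.json remains the simpler route.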

Scaling, health, and zero-downtime deploys

App Platform can horizontally scale your web service to multiple instances. Because instances are stateless, never store session state on disk or memory that you expect to survive restarts or scale-out. Use cookies with signed JWTs, Redis, or your database. Deploys are rolling and you can watch the Deployments tab to see the build and live cutover; failed builds won’t replace the current healthy version.

Troubleshooting the “runs locally, 404 in prod” class of errors

If your app reaches the platform but something is off in production, check these first. Ensure that your Run Command actually binds the injected PORT, otherwise the health check fails and the app restarts. Confirm environment variables are in the right scope for build or runtime. If you switched to output: 'standalone', make sure you’re using node .next/standalone/server.js and that any extra files you need were included via outputFileTracingIncludes (experimental.outputFileTracingIncludes before Next.js 15) or by copying them in your Dockerfile. If pages load without styles or scripts in standalone mode, check that public/ and .next/static/ were copied next to the standalone server.

For ISR surprises after redeploy, remember that the shared cache on disk is not persistent. Warm critical pages on deploy, or trigger on-demand revalidation from your CMS, to smooth over cold starts.
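Warming pages after a deploy can be as simple as requesting each critical path once. This is a rough sketch under stated assumptions: warmPaths, FetchLike, and WarmResult are hypothetical names, and the fetch function is passed in so the script can run against the global fetch in Node 18+ or a stub in tests.

```typescript
// Minimal fetch shape needed here; the global fetch in Node 18+ satisfies it.
type FetchLike = (url: string) => Promise<{ ok: boolean; status: number }>;

interface WarmResult {
  path: string;
  ok: boolean;
  status: number | null;
}

// Join a base URL and paths without producing double slashes.
function buildWarmUrls(baseUrl: string, paths: string[]): string[] {
  const base = baseUrl.replace(/\/+$/, "");
  return paths.map((path) => `${base}${path.startsWith("/") ? path : `/${path}`}`);
}

// Hypothetical warm-up routine: request each path once so the new
// container renders and caches it before real traffic arrives.
async function warmPaths(baseUrl: string, paths: string[], fetchFn: FetchLike): Promise<WarmResult[]> {
  const urls = buildWarmUrls(baseUrl, paths);
  const results: WarmResult[] = [];

  for (let i = 0; i < urls.length; i++) {
    try {
      const res = await fetchFn(urls[i]);
      results.push({ path: paths[i], ok: res.ok, status: res.status });
    } catch {
      // Network failures are recorded rather than thrown so one bad path
      // does not stop the rest from being warmed.
      results.push({ path: paths[i], ok: false, status: null });
    }
  }
  return results;
}
```

A post-deploy CI step could call warmPaths('https://YOUR_APP_DOMAIN', ['/', '/blog'], fetch) and log any entries where ok is false. Requests run sequentially here to keep load on a small instance predictable; parallel requests are a reasonable alternative for long path lists.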

A minimal “doctl” workflow you can repeat

If you prefer command-line deployments with a spec, this small script shows how to create and then update the app consistently. You can paste this into your CI with environment variables for the app ID and paths.

    # First-time: create the app from a spec file
    doctl apps create --spec .do/app.yaml --format ID,DefaultIngress,Created

    # Subsequent updates: apply changes to the spec
    APP_ID="your-app-id"
    doctl apps update "$APP_ID" --spec .do/app.yaml --format ID,DefaultIngress,Updated

Because your run/build commands and env are in the spec, your deploys are reproducible across environments.

Putting it all together

If you use buildpacks, set npm run build and npm run start, keep Node pinned to a current LTS, and rely on the platform to pass PORT and provide TLS. If you want a smaller server footprint, flip output: 'standalone' and run node .next/standalone/server.js instead. Treat the filesystem as temporary, especially for ISR cache and uploads. Route Handlers, middleware, websockets, and API routes all work because you’re running a full Next.js Node server. With that foundation, your app is “wholly under App Platform,” no separate Droplet or reverse proxy required.