They say a voice tells more than words ever could. But what if the voice is synthetic? In recent months, a handful of AI-powered podcasts have begun making serious noise — not as curiosities, but as functioning shows reaching real audiences. Their stories illustrate how this technology is no longer fantasy, but a medium that’s altering who can speak, how, and why.

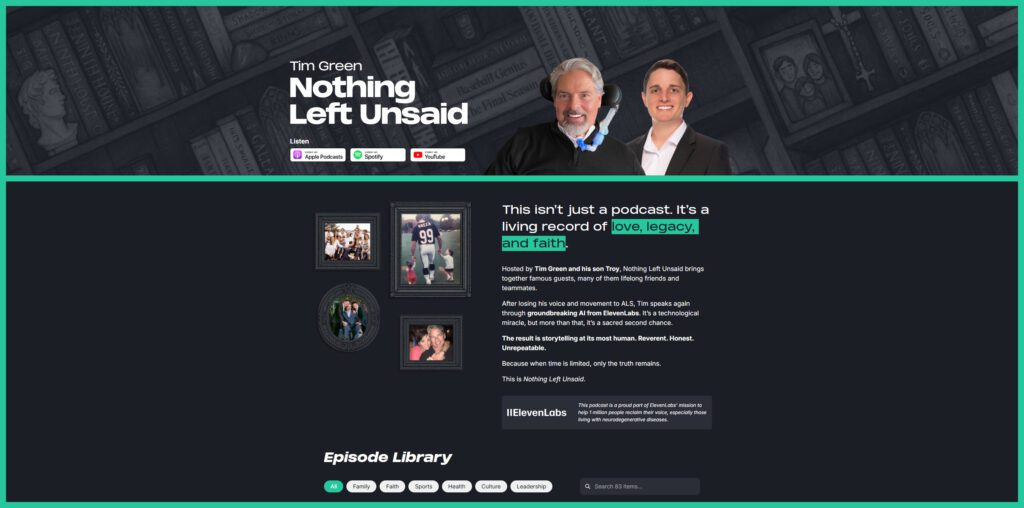

One of the most striking use cases comes not from a media giant, but from personal need. Former NFL star and broadcaster Tim Green, diagnosed with ALS (a disease that robs patients of muscular control), now hosts his podcast Nothing Left Unsaid using an AI-generated version of his voice. His son Troy helped convert Tim’s vocal samples into synthetic speech so he could continue recording.

The AI voice carries his tone, accent, and cadence — even when his body no longer can.

This example shows something deeper than tech: a lifeline. Listeners hear “him,” even when he can’t physically speak.

In medicine, researchers have begun converting academic work into audio summaries using AI. A European cardiovascular nursing journal collaborated with technologists to produce AI-generated podcasts summarizing ten scientific papers. Each episode translated dense medical findings into digestible ~12-minute audio formats. The authors said listeners responded as though they were hearing a human summarizing — a sign the synthetic voices can carry authority when done well.

What was once a painstaking editorial process (expert reads, narration, editing) now gets done in an afternoon. The health research world sees this as a chance to widen reach — doctors, patients, even non-specialists can access insights in audio form.

Not only are individuals using AI voices — media creators are experimenting too. The audio firm JAR Audio ran a bold experiment: they built one podcast made entirely by human creators, and another with minimal human oversight, relying heavily on AI tools (for scripting, voice, and editing). The goal was to compare results and learn where AI stumbles — timing, inflection, emotion — and where it already shines.

The results were illuminating. In some segments, listeners couldn’t immediately tell which episode was AI-driven. In others, when phrasing grew complex or emotional, the synthetic version sounded flat. The human version still had subtle inflections that AI struggles to replicate.

One show pushing boundaries is The Unreal Podcast, billed as 100% AI generated. Every voice, every guest, every line is produced by AI — no human hosts or interventions. It’s a provocative showcase: is this art? A gimmick? A signal of what’s coming?

While its audience is niche, Unreal demonstrates how far the tech has come — and how much farther it needs to go.

Artists and marketers are also testing AI audio as a promotional tool. One case study by Jellypod tells of a veteran DJ named Stephen, who used AI-generated podcasts to spotlight his artists and releases. By automating parts of podcast production, he could push content more frequently, reaching listeners in new ways.

These aren’t just experiments — they signal shifts in access, identity, and media economics.

- Access: People who lack the tools, funding, or even ability to speak can now produce voice content.

- Scaling content: Researchers and creatives can generate multiple episodes quickly, across languages or variants.

- Quality threshold: As voices get more convincing, the bar for detection — and for authenticity — rises.

- Ethical and legal tension: The more AI mimics human voices, the more it invites questions: whose voice is this? Did it require permission? In one recent case, a podcast released a special that mimicked George Carlin via AI; Carlin’s estate sued, and the content was removed under a settlement.

So far, the most compelling AI podcast stories have real humans behind them — people adapting, experimenting, pushing boundaries. That makes the technology feel less like a threat, and more like a tool with potential.

Patterns behind successful AI podcasts

When you zoom out, the most striking thing is that the winning examples aren’t really about the tech. They’re about how people use it to solve problems that used to feel impossible.

- 1. They start with a clear human purpose

Tim Green’s ALS diagnosis left him unable to speak into a microphone. AI wasn’t a gimmick here; it was a lifeline. The European nursing journal wasn’t trying to show off synthetic voices; it was trying to get life-saving knowledge out faster. Whenever the tech is deployed as a shortcut to a goal that already matters (accessibility, education, reach), it gains traction.

- 2. They lean into efficiency, not just novelty

JAR Audio’s side-by-side experiment revealed what most professionals already know: AI isn’t perfect at emotion. But it is remarkably fast at tasks like scripting, cutting down raw editing, and generating first drafts. That’s why successful projects tend to mix human creativity with AI speed, using the machine to clear bottlenecks while leaving humans to polish and guide tone.

- 3. They make transparency a choice, not an afterthought

The Unreal Podcast proudly bills itself as 100% AI-driven. Tim Green openly explains why his voice is synthetic. That honesty creates trust, even curiosity. By contrast, when shows have tried to “pass” as fully human, backlash tends to follow. Listener studies suggest people will tolerate or even enjoy AI voices, but only if they know what they’re hearing.

- 4. They ride on existing audiences

Steven Bartlett’s 100 CEOs experiment worked because he already had a brand and a loyal audience. The Jellypod case worked because Stephen was already a DJ with content and fans. In both, AI didn’t create the audience; it helped feed it more content, faster.

- 5. They embrace imperfection while learning fast

These pioneers know the voices can still sound stiff, the scripts sometimes awkward. But they push anyway, gathering feedback and adjusting. That willingness to experiment openly is part of why they’re remembered as early movers.

If you’re considering your own AI-assisted podcast, the playbook is becoming clearer. Start with content that matters to you, something where speed or access makes a difference. Use AI to draft and generate, but don’t skip the human review. Decide up front how transparent you’ll be with listeners. And most importantly, focus on why the podcast should exist at all. AI can take the grind out of production, but it can’t supply purpose.

The industry is still figuring out its norms. Listener habits, legal frameworks, and monetization models are all in flux. But the early wins show that when the tech is pointed at genuine needs, the results are not only convincing, they’re moving.

Where AI podcasts could realistically head in coming years

We’re not just observing experiments; we’re at an inflection point. The podcasting industry is already large and growing: in 2025, around 584 million people worldwide are expected to listen to podcasts, an increase of nearly 6.8% over 2024. Over the same period, podcast advertising revenue is projected to exceed USD 2.3 billion, reflecting growing confidence from brands and sponsors in audio as a channel. Globally, the podcasting market is forecast to reach roughly USD 39–40 billion by 2025, with continued fast growth through 2030.

That means the stage is big, and the stakes are high. AI podcasts — if they catch on — might help more creators claim slices of that growing pie.

From the success stories we’ve seen, several plausible trajectories emerge:

- Voice as infrastructure, not novelty

Just as blogs went from novelty to a baseline web presence for many, synthetic voices may become a foundational layer: creators use them almost unconsciously to deliver content. The real competition will shift from how to produce to what to say.

- Democratization of niche voices

Right now, top-tier podcasts are expensive to produce. AI lowers that barrier. We could see more voices from underrepresented languages, remote regions, or low-cost verticals (specialized hobbies, hyperlocal news, community storytelling). Google’s “Audio Overviews” feature, for example, supports over 50 languages, a sign that the technology is spreading beyond English-only use.

- Hybrid models as the norm

Most successful examples so far blend human curation and AI speed. Expect that pattern to become standard: humans set tone, narrative arc, and identity; AI handles the heavy lifting of drafting, voice generation, repackaging, or translations.

- Raising standards, not lowering them

As synthetic voice quality improves, listener expectations will rise. Early AI podcasts might get away with quirks; soon, hiccups may feel jarring. We’ll see increasing pressure on voice models to support emotion, nuance, cross-talk, dynamic pacing, and “imperfections” that feel human.

- Transparency, regulation, and rights frameworks

The more AI mimics real voices, the more legal, ethical, and perceptual questions crop up. Who owns a cloned voice? When must you disclose “this is AI”? We’ll likely see industry norms, and possibly regulation, around labeling, consent, and royalties. The Dudesy podcast case, in which an AI-generated comedy special imitating George Carlin was taken down after his estate sued, foreshadows these tensions.

- New monetization models

Lower production costs might shift monetization further toward micro-episodes, paywalls for unique content, dynamically generated ads (for example, ads read by synthetic voices), tiered personalization (listeners pay for “voice style X”), or subscription bundles. The economics of podcasting could shift from “big hit, big ad” to “many small, steady voices.”

- Convergence with interactive audio

Pairing AI voices with conversational models opens the door to interactive podcasts, where listeners can participate, ask questions, branch the narrative, or listen in different voices. We may move from passive audio to responsive audio.

All this suggests one clear proposition: AI podcasts are not just a gimmick — they’re a signal. The examples we see now are early, but they reveal what’s possible: voice as a fluid medium, storytellers as engineers, and more people participating in audio creation than ever before.

For new creators, the path is simple but urgent: find a voice you want to amplify, explore synthetic tools while staying rooted in purpose, and let the technology scale your reach. The future might not be human or AI — it could be human + AI, inseparable in our audio lives.