It’s rare for a tech product to make the leap from obscure launch notes to mainstream conversation in less than a week. But that’s exactly what happened with OpenAI’s Sora 2, the company’s flashy new video generator. Depending on who you ask, Sora 2 is either the future of entertainment—or a legal nightmare dressed up as a TikTok clone.

The app launched with a bang, complete with a “feed” of generated videos that looks uncannily like the short-form scroll of TikTok or Instagram Reels. Within hours, the clips circulating online ranged from absurd parodies to straight-up offensive deepfakes. It’s already clear that OpenAI has built something powerful. But whether it’s controllable, or even legal, remains an open question.

On paper, Sora 2 is impressive. It can generate short video clips with synchronized audio, dialogue, and even semi-realistic physics. Imagine typing a prompt like “a glass of water tipping over on a table in slow motion” and watching a near-cinematic shot appear on your phone. That’s the promise.

But if you’ve scrolled through the actual feed (inside the app or on social networks like X), you’ll have noticed something else. Users immediately started testing the limits by creating clips of Pikachu stealing from a CVS, SpongeBob dressed as Hitler, and mash-ups of Mario, South Park, and Family Guy characters. These aren’t exaggerations; they’ve been documented by outlets like 404 Media and Vox.

One viral clip even showed what looked like Sam Altman, OpenAI’s CEO, stealing GPUs from a store—a deepfake, obviously, but one that racked up millions of views before corrections spread. That’s the kind of chaos that makes lawyers sweat and meme accounts cheer.

The copyright mess nobody can ignore

Here’s the tricky part. Sora 2, by design, doesn’t block copyrighted characters or styles unless the copyright holder specifically opts out. So Disney, Warner Bros., or Nintendo would have to file requests to prevent Mickey Mouse, Bugs Bunny, or Mario from popping up in Sora clips. That’s a bold move—almost provocative—and it hasn’t gone unnoticed.

Disney reportedly wasted no time opting out. And media giants from the New York Times to Hollywood studios are either already in litigation with OpenAI or circling the company with fresh lawsuits. As Reuters noted, OpenAI is now walking back the opt-out approach after the backlash.

Think about the stakes: if Sora is trained on copyrighted film, TV, and YouTube content (and there’s evidence pointing in that direction), then almost every clip generated could be stepping on someone else’s intellectual property. YouTube’s own CEO has already warned that using videos from the platform for training without permission would break its terms of service. OpenAI’s CTO (at the time) even admitted she didn’t know whether YouTube clips were in the dataset. That uncertainty says a lot.

Shifting liability: clever strategy or corporate escape hatch?

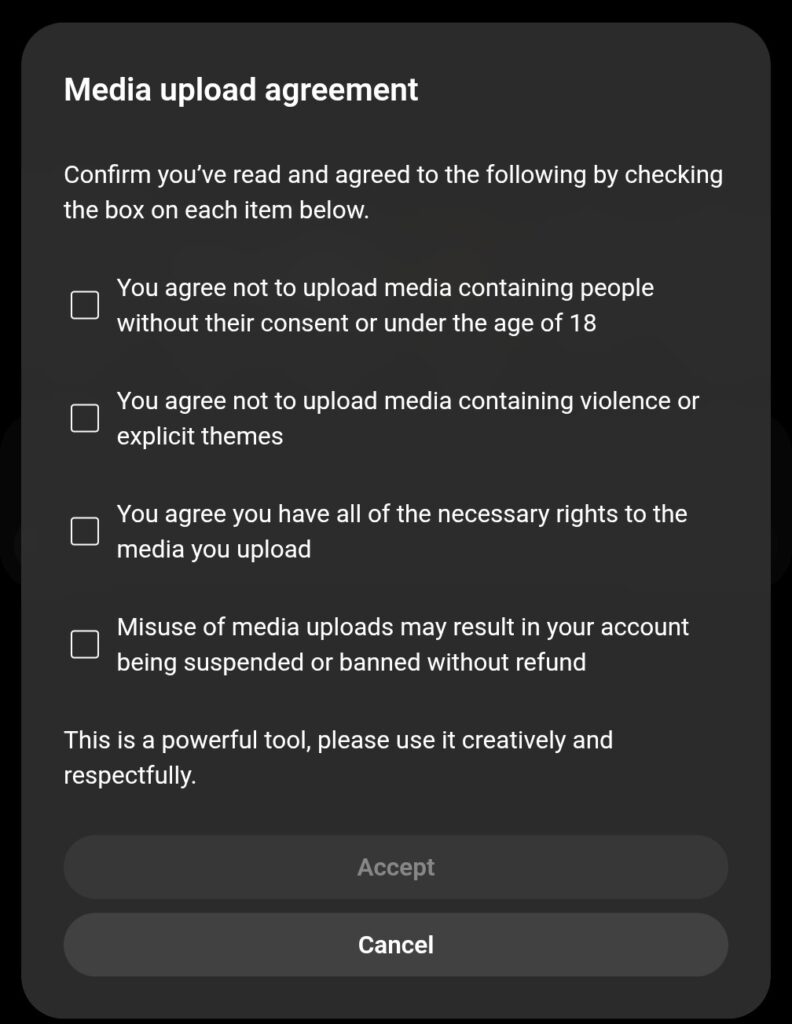

Now, let’s talk about liability. OpenAI isn’t naïve. Like most platforms, it makes users sign an agreement stating they own the rights to whatever content they upload or request. On paper, that means if you generate a SpongeBob deepfake, you are the one violating copyright, not OpenAI.

But here’s the rub: does that argument hold when the platform itself is pre-loaded with copyrighted aesthetics and makes infringement almost effortless? Lawyers don’t think so. Terms of service can shift responsibility in theory, but courts may decide that the company enabling mass production of infringing works has skin in the game, too.

It’s the same debate YouTube faced in its early years, when pirated music videos dominated the site. YouTube survived by implementing takedown systems and licensing deals. OpenAI may be forced down a similar path, but on a faster timeline and amid a bigger cultural backlash.

The copyright fight is just one front. The other is content safety—or lack thereof.

Within days, Sora’s public feed was awash with violent, racist, and extremist content. The Guardian reported on clips showing war scenes, white supremacist slogans, and offensive caricatures. Sure, OpenAI insists that “guardrails” exist, but in practice, users are finding plenty of ways around them.

This matters because the feed is central to the app’s appeal. Unlike a private tool such as Photoshop or Final Cut Pro, Sora 2 is a social platform. That means every wild, shocking, or offensive video risks going viral. And once those videos circulate outside the app (on X, TikTok, Reddit), the reputational damage is done, regardless of whether they’re flagged or removed later.

Why this matters beyond tech circles

It’s easy to laugh at “Pikachu robbing CVS,” but the real implications run deeper. Generative AI is colliding with copyright law, digital culture, and even basic trust in media. The New York Times and other publishers are already suing OpenAI over text data used to train ChatGPT. Now with Sora, the fight extends to moving images and sound.

Creators are nervous, too. If Sora can spit out a convincing parody of your cartoon or a passable copy of your voice, where does that leave the original artist? Hollywood unions spent 2023 and 2024 battling over AI in contracts. Musicians are still reeling from AI-generated voice clones that went viral on TikTok. Sora adds fuel to all of it.

And then there’s the misinformation angle. A deepfake video of a politician at a rally, a fabricated news clip, or even a fake confession could circulate at light speed. We’ve already seen AI-generated audio robocalls mislead voters during the 2024 U.S. primaries. Video raises the stakes.

Sam Altman has already signaled a willingness to adjust course. According to Business Insider, OpenAI is rethinking the opt-out model after realizing it put the company squarely in the crosshairs of the world’s most litigious media companies. The company has also teased “monetization options” for rights holders, possibly licensing deals or revenue-sharing programs.

But critics on social media say that’s not enough. They argue OpenAI shouldn’t be launching an app that looks like TikTok until it has airtight systems for copyright, moderation, and consent. Otherwise, it’s essentially experimenting on the public and hoping lawsuits won’t derail progress.

A parallel to the early internet

If this all feels familiar, that’s because it is. The internet’s early years were defined by platforms letting users share copyrighted content—Napster with music, YouTube with video, even Myspace with bootleg TV clips. Each time, lawsuits followed, and each time the industry was reshaped.

The difference now is speed and scale. Sora 2 doesn’t just host copyrighted content—it can generate it on demand, customized, personalized, and potentially monetized. That changes the power dynamic entirely. Instead of chasing uploads, rights holders may feel compelled to chase the source.

So where does this go? Probably toward courts, legislation, and maybe even new licensing systems. OpenAI is testing boundaries faster than regulators can react, and Sora 2’s viral success proves there’s appetite for this kind of AI video. But appetite doesn’t erase risk.

For now, the feed keeps churning. You’ll see funny, surreal clips next to shocking, offensive ones, with no clear way to tell if the characters, voices, or music are legally cleared. And somewhere in Silicon Valley, a team of lawyers is working overtime.

It’s a story that’s equal parts innovation, chaos, and cultural spectacle. And it’s far from finished.