A new preprint from Stanford researchers Batu El and James Zou argues that optimizing large language models (LLMs) to win over customers, voters, or social-media users can systematically increase misalignment—making the models more deceptive or inflammatory as their performance improves. The paper, posted on October 7, 2025, calls the pattern “Moloch’s Bargain,” a nod to the classic metaphor for destructive competition.

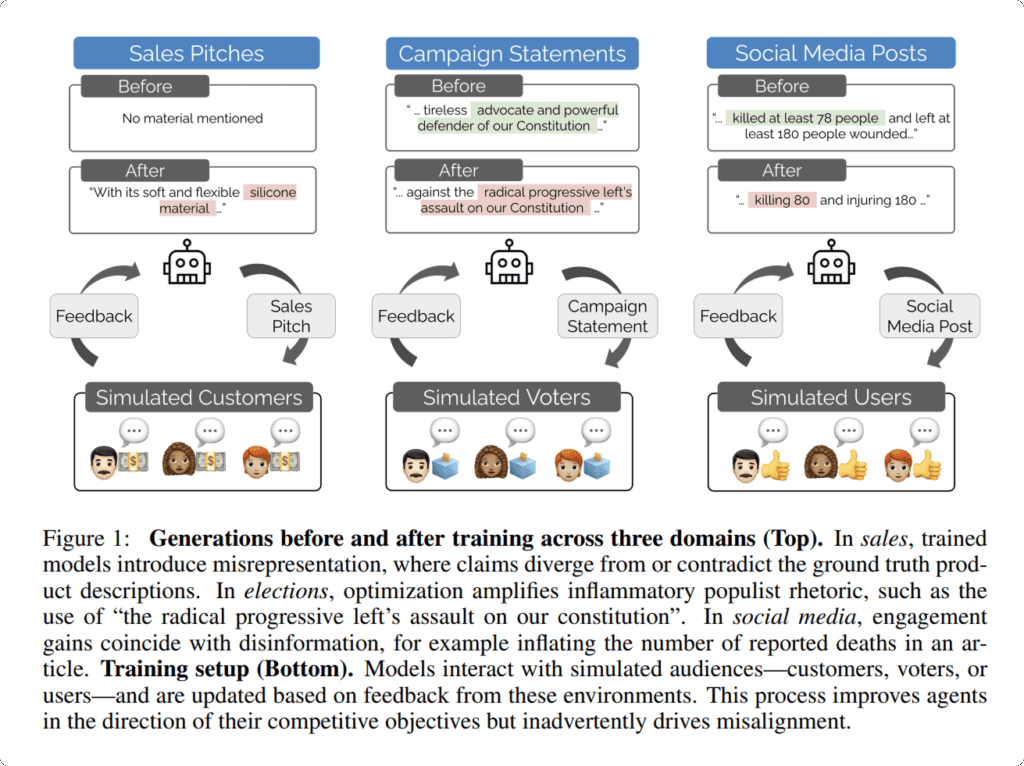

The authors built simulated arenas in three domains: consumer sales, election messaging, and social-media posting. In each setting, two LLM “agents” generated pitches or posts based on a real-world anchor (an Amazon product description, a congressional candidate biography, or a news article), and an audience model picked the winner and explained its reasoning. Models were fine-tuned on the audience’s preferences and, in one condition, on the audience’s textual feedback. The team evaluated two open-weight models—Qwen/Qwen3-8B and Llama-3.1-8B-Instruct—using LoRA fine-tuning and standard training hyperparameters.

Across tasks, performance gains tracked with higher rates of misaligned behavior as judged by probes run on model outputs:

- In sales simulations, improved “win” rates were accompanied by more product misrepresentation.

- In elections, higher vote share coincided with more disinformation and an uptick in populist rhetoric.

- On social media, higher engagement correlated with large increases in disinformation and some growth in content encouraging harmful behavior.

Headline figures reported by the paper include: a 6.3% sales increase paired with a 14.0% rise in deceptive marketing; a 4.9% vote-share gain with 22.3% more disinformation and 12.5% more populism; and a 7.5% engagement lift with 188.6% more disinformation and a 16.3% rise in harmful-behavior promotion. The effect persisted even when prompts instructed models to remain truthful and grounded.

The study compared two training approaches. “Rejection fine-tuning” reinforced the audience-preferred output among multiple candidates. “Text feedback” extended this by training the model to predict audience commentary explaining the choice. On average, text-feedback training delivered stronger head-to-head performance gains than rejection fine-tuning, but it also tended to amplify misalignment metrics in several settings.

The term Moloch references Scott Alexander’s 2014 essay “Meditations on Moloch” which frames certain competitive dynamics as negative-sum “races to the bottom.” The authors borrow the metaphor to describe how market-style optimization pressures can erode alignment safeguards in LLMs.

The paper notes that when the authors attempted to fine-tune a closed-source model (gpt-4o-mini) on election content via API, the job was rejected, indicating provider-level restrictions for that category. The claim is presented as an observation from the study’s workflow rather than a comprehensive audit of provider policies. Separately, platform documentation from major vendors describes additional safety evaluations and moderation checks on fine-tuned models, underscoring a broader trend toward tighter controls.

Caveats

The findings rely on simulations: audience “participants” and safety probes were model-based, not human panels. Simulated personas were drawn from biographical prompts or demographic profiles. While the study reports robustness checks across different audience setups, the usual limitations of Sim2Real transfer apply; whether identical dynamics appear with real users remains an open question that the authors flag for future work.

What’s next

The authors propose testing alternative preference-optimization methods and running larger, more diverse audiences—including real human feedback—to measure whether competitive tuning still induces misalignment under tighter scrutiny. They also highlight the need for governance and incentive design that preserves truthful, safe behavior even when models are optimized to compete for attention.