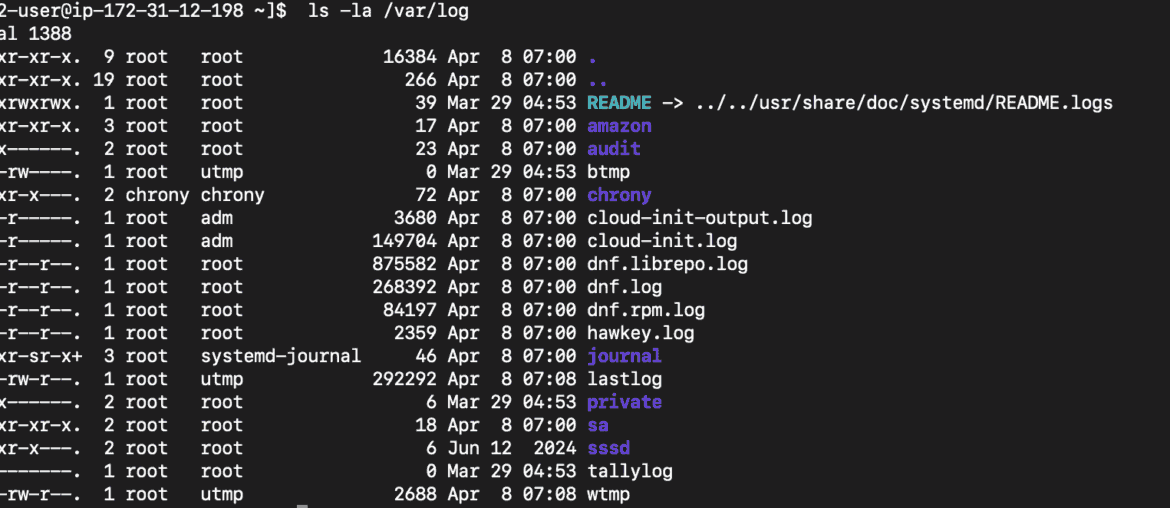

When an app misbehaves in production, the fastest clues usually live in plain-text log files. Most Linux servers, including a small Ubuntu Droplet running Nginx or a Node app, write rotating logs under /var/log/. You do not need a full observability stack to get signal quickly; a shell, tail, and grep take you surprisingly far for day-to-day triage. You will work from a terminal as a user with read access to the log files. If you are on a fresh cloud VM, make sure you can SSH in first (on DigitalOcean, that means a Droplet and an SSH key). We will build up from a one-liner to reusable helpers, with notes on rotation, performance, and safety so you can reuse the approach across services.

Prerequisites

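You need shell access to the server, a Linux shell environment (Ubuntu, Debian, CentOS, and Alpine all work), and the base tools tail and grep. tail ships in GNU coreutils and grep in its own package; both are installed by default on most distributions. Several options used below (tail -F and --retry, grep --line-buffered) go beyond POSIX, and minimal images such as Alpine ship BusyBox versions that may lack some of them, so install the coreutils and grep packages there. Confirm basic access first and decide which log you care about (for example, /var/log/nginx/access.log or your app's file under /var/log/myapp/). The examples assume Ubuntu paths and permissions; if the log requires elevated access, prefix commands with sudo. The goal is to stream new lines as they are written, filter for the lines that matter, and then iterate toward something you can keep in your toolkit.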

Follow a log in real time

tail -f streams appended lines; tail -F does the same but survives log rotation by reopening the file if it gets renamed.

```bash
# Follow Nginx access log, surviving rotations
sudo tail -F /var/log/nginx/access.log
```

Start here to confirm the file is active and that you see fresh entries as requests arrive. If nothing appears during a test request, you may be looking at the wrong file, or buffering may be delaying writes; verifying the path now saves time later.
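
A quick way to confirm you picked a live file is to check how recently it was modified, make a test request, and check again. The helper below is a hypothetical sketch; it assumes GNU stat, where stat -c %Y prints the modification time in seconds since the epoch (on BSD or macOS the equivalent is stat -f %m).

```bash
# log_age FILE: print how many seconds ago FILE was last modified.
# Hypothetical helper; relies on GNU stat (-c %Y = modification time in epoch seconds).
log_age() {
  local now mtime
  now=$(date +%s)
  mtime=$(stat -c %Y -- "$1") || return 1
  echo $(( now - mtime ))
}
```

For example, run log_age /var/log/nginx/access.log before and after a test request; a value that drops to near zero means requests are landing in that file.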

Filter with grep while you stream

Piping tail into grep lets you keep only the lines that match a pattern. Use -E for extended regular expressions, -i for case-insensitive matching, and --line-buffered to make grep flush each match immediately. Without it, grep buffers output to a pipe in large blocks, which delays matches in streaming pipelines.

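```bash
# Watch for 5xx responses and timeouts, flushing matches promptly
sudo tail -F /var/log/nginx/access.log \
  | grep -E --line-buffered '" 5[0-9]{2} |timeout|upstream timed out'
```

This pattern surfaces server errors (500–599) and common timeout messages as they occur. Anchoring the status code to the closing quote of the request field (as laid out in Nginx's default combined format) keeps a response size such as 512 bytes from matching. Nginx writes "upstream timed out" messages to error.log rather than the access log, so follow that file too if you need them. Immediate flushing avoids multi-second delays that can otherwise hide live issues during an incident.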
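
Feeding a pattern a few sample lines before pointing it at live traffic is a cheap way to catch false positives. The sketch below uses made-up lines in Nginx's default combined format and compares a bare space-delimited 5xx pattern with one anchored to the closing quote of the request field; sample_log is a hypothetical name.

```bash
# Made-up sample lines in Nginx's default "combined" log format.
sample_log() {
  cat <<'EOF'
203.0.113.7 - - [10/Oct/2024:13:55:36 +0000] "GET /api/orders HTTP/1.1" 502 166 "-" "curl/8.5.0"
203.0.113.8 - - [10/Oct/2024:13:55:37 +0000] "GET /index.html HTTP/1.1" 200 512 "-" "curl/8.5.0"
203.0.113.9 - - [10/Oct/2024:13:55:38 +0000] "POST /login HTTP/1.1" 504 0 "-" "curl/8.5.0"
EOF
}

# Loose: any space-delimited 5xx number, so the 200 response of 512 bytes also matches.
sample_log | grep -cE ' 5[0-9]{2} '    # prints 3
# Anchored: the status field follows the closing quote of "$request".
sample_log | grep -cE '" 5[0-9]{2} '   # prints 2
```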

Watch multiple patterns, keep context

Negative matches (exclude) and alternation (either/or) help you narrow to what matters without losing context. Start broad, then ratchet down.

```bash
# Surface app errors but drop noisy health checks
sudo tail -F /var/log/myapp/app.log \
  | grep --line-buffered -E 'ERROR|Exception|Traceback' \
  | grep -vE 'GET /healthz|GET /metrics'
```

The first grep includes errors; the second removes routine probes. If you later find a recurring benign error string, add it to the exclusion to keep the stream focused.
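
If you prefer a single process, awk can apply the include and exclude tests together. The sketch below reuses the same patterns as the chained greps; filter_app_errors is a hypothetical name, and fflush() plays the role that --line-buffered plays for grep.

```bash
# filter_app_errors: keep error-like lines and drop health-check/metrics probes
# in one awk process instead of two chained greps. Hypothetical helper name.
filter_app_errors() {
  awk '/ERROR|Exception|Traceback/ && !/GET \/healthz|GET \/metrics/ { print; fflush() }'
}
```

Usage: sudo tail -F /var/log/myapp/app.log | filter_app_errors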

Add timestamps when logs omit them

Many app logs include timestamps; access logs almost always do. If yours does not, you can annotate the stream with the time you saw the line. The awk snippet below prefixes each line with an ISO-8601 timestamp from the server clock so you can correlate with alerts or dashboards.

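```bash
# Prepend an ISO-8601 UTC timestamp to each streamed line
sudo tail -F /var/log/myapp/app.log \
  | awk '{ cmd = "date -u +%Y-%m-%dT%H:%M:%SZ"; cmd | getline d; close(cmd); print d, $0; fflush() }'
```

The close(cmd) call matters: without it, awk keeps reading from the same, already finished date process, and every line gets the first timestamp. Because this starts one date process per line, it suits moderate log volumes. It does not replace accurate in-app timestamps, but it is good enough to align events across systems during a live investigation.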
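
As an alternative that avoids starting a date process for every line, bash 4.2 and later can format the current time itself with printf's %(...)T directive, where an argument of -1 means "now". The stamp_lines function below is a hypothetical sketch; it prints local time with a numeric UTC offset rather than forcing UTC, which is still unambiguous when you correlate events.

```bash
# stamp_lines: prefix each stdin line with the current local time and UTC offset,
# e.g. 2024-10-10T13:55:36+0000. Uses bash's built-in printf %(...)T (bash 4.2+).
stamp_lines() {
  local line
  # "|| [[ -n $line ]]" keeps a final line that lacks a trailing newline.
  while IFS= read -r line || [[ -n "$line" ]]; do
    printf '%(%Y-%m-%dT%H:%M:%S%z)T %s\n' -1 "$line"
  done
}
```

Usage: sudo tail -F /var/log/myapp/app.log | stamp_lines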

Color and readability in the terminal

Color helps your eyes scan for matches. grep --color=always highlights the pattern; pairing with less -R lets you scroll without losing colors. Use this when streaming is too fast and you want to pause and review.

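```bash
# Highlight severe entries, keep scrollback with colors preserved
sudo tail -F /var/log/nginx/error.log \
  | GREP_COLORS='mt=01;31' grep --color=always -E --line-buffered 'crit|alert|emerg|fatal| 5[0-9]{2} ' \
  | less -R
```

GNU grep 3.8 and later warns that GREP_COLOR is obsolescent; GREP_COLORS='mt=01;31' sets the same match color. less -R shows what has arrived so far; press Shift+F inside less to keep following new lines. Choose brief, high-signal patterns; too many highlights defeat the purpose. If the stream is calm, switch back to raw streaming to reduce overhead.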
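
Sometimes you want highlights without filtering, so the surrounding lines stay visible. A common GNU grep idiom adds an empty alternative, $, which matches at the end of every line: every line passes, and only the real matches get colored. The highlight function below is a hypothetical wrapper around that idiom.

```bash
# highlight REGEX: color matches of REGEX in red but pass every line through.
# Hypothetical helper; the trailing "|$" alternative matches (empty) at each line's end.
highlight() {
  GREP_COLORS='mt=01;31' grep --color=always --line-buffered -E -- "$1|\$"
}
```

Usage: sudo tail -F /var/log/nginx/error.log | highlight 'crit|alert|emerg' | less -R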

Grab recent history before following

During an incident, you often need the last N lines to see what led up to the problem. tail -n prints a fixed window and then you can continue following with -F.

```bash
# Show the last 200 lines, then continue live with a filter
sudo tail -n 200 -F /var/log/myapp/app.log \
  | grep -E --line-buffered 'ERROR|WARN|Timeout'
```

This provides immediate context so you are not blind to the seconds or minutes before your session started. Adjust the window to log volume and retention.
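
grep's -B and -A options print lines before and after each match, which is often the quickest way to see what led up to an error inside that window. The helper below is a hypothetical sketch; its defaults (200 lines, two lines before and one after each match) are arbitrary.

```bash
# recent_with_context FILE REGEX [LINES]: search the last LINES lines of FILE (default 200)
# and print 2 lines before and 1 line after each match. Hypothetical helper name.
recent_with_context() {
  local file="$1" regex="$2" lines="${3:-200}"
  tail -n "$lines" -- "$file" | grep -E -B 2 -A 1 -- "$regex"
}
```

The same options work on a live stream, for example sudo tail -n 200 -F /var/log/myapp/app.log | grep -E --line-buffered -B 2 -A 1 'ERROR'; GNU grep prints -- between groups that are not adjacent.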

Target common web patterns

Access logs encode status codes, methods, and paths; that structure makes filtering powerful. The examples assume the default Nginx format, but the approach holds for Apache or custom formats with minor edits.

```bash
# Show lines from one client IP, or any 5xx response
sudo tail -F /var/log/nginx/access.log \
  | grep -E --line-buffered '^203\.0\.113\.42 |" 5[0-9]{2} '

# Track slow requests (1 s or more), assuming a custom log_format that ends with $request_time
sudo tail -F /var/log/nginx/access.log \
  | awk '$NF+0 >= 1 { print; fflush() }'
```

Iterate on the pattern while you observe traffic; you will quickly build queries that map to your app's failure modes.
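
The grep alternation in the first example matches either condition. To require both (one client's 5xx responses only), comparing fields is simpler than writing one regex. This sketch assumes Nginx's default combined format, where splitting on whitespace puts $remote_addr in field 1 and $status in field 9; a custom log_format or a malformed request line shifts those numbers. client_5xx is a hypothetical name.

```bash
# client_5xx IP: print only 5xx responses for one client. Assumes the default
# "combined" format: field 1 = $remote_addr, field 9 = $status. Hypothetical helper.
client_5xx() {
  awk -v ip="$1" '$1 == ip && $9 ~ /^5[0-9][0-9]$/ { print; fflush() }'
}
```

Usage: sudo tail -F /var/log/nginx/access.log | client_5xx 203.0.113.42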

Survive log rotation and missing files

Most systems rotate logs with logrotate, which by default renames the current file and creates a new one. tail -F handles this automatically; tail -f keeps following the renamed file and misses new entries. If your app creates its log only on first write, tail can wait for the file to appear: -F is shorthand for --follow=name --retry, so it keeps retrying a missing path.

```bash
# Wait for a log file to show up and follow it thereafter (same as tail -F, spelled out)
sudo tail --follow=name --retry /var/log/myapp/app.log
```

Retrying is helpful on first deploys where the file may not exist yet, but do not leave it running unattended on the wrong path, since it will wait indefinitely.
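
To watch this behavior without touching real logs, the sketch below simulates a rename-style rotation in a temporary directory and prints what tail -F saw. It assumes GNU tail; the sleep durations are arbitrary safety margins, and -s 0.2 only shortens the polling interval tail falls back to when inotify is unavailable. rotation_demo is a hypothetical name.

```bash
# rotation_demo: simulate a rename-style rotation and print what tail -F picked up.
# Hypothetical helper; everything happens in a throwaway temp directory.
rotation_demo() {
  local dir log seen tail_pid
  dir=$(mktemp -d)
  log="$dir/app.log"
  seen="$dir/seen.txt"
  : > "$log"

  # Follow by name from the current end of the file, in the background.
  tail -F -s 0.2 -n 0 "$log" > "$seen" 2>/dev/null &
  tail_pid=$!
  sleep 1

  echo "before rotation" >> "$log"
  sleep 1
  mv "$log" "$log.1"               # rename the active file, as logrotate does by default
  echo "after rotation" > "$log"   # the service starts writing a fresh file
  sleep 2

  kill "$tail_pid"
  wait "$tail_pid" 2>/dev/null || true
  cat "$seen"
  rm -rf "$dir"
}
```

Running rotation_demo should print both the line written before the rename and the one written to the fresh file.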

Extract from compressed rotated logs when needed

When you need to look back further than the active file, many systems compress older logs with gzip. Stream them with zcat/zgrep so you do not have to decompress to disk.

```bash
# Search older, gzipped rotations for a signature
sudo zgrep -hE 'OutOfMemoryError|killed process' /var/log/myapp/app.log.*.gz
```

How far back this reaches depends on your logrotate schedule and rotate count. If you want both current and historical coverage, run a one-off zgrep to bound the timeframe, then attach a live tail on the active file to keep watching forward.
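
zgrep also reads uncompressed files as-is, so one command can cover the active file, an uncompressed .1 (common when logrotate uses delaycompress), and older .gz archives. The hypothetical zgrep_demo function below builds a throwaway set of files to show that.

```bash
# zgrep_demo: build a throwaway set of "rotated" logs and search them all at once.
# File names mimic logrotate with delaycompress: app.log, app.log.1, app.log.2.gz.
zgrep_demo() {
  local dir
  dir=$(mktemp -d)
  printf '%s\n' 'ok' 'ERROR current' > "$dir/app.log"
  printf '%s\n' 'ERROR yesterday' > "$dir/app.log.1"
  printf '%s\n' 'ERROR last week' 'ok' | gzip > "$dir/app.log.2.gz"
  # zgrep decompresses the .gz file and reads the plain files unchanged; -h drops file names.
  zgrep -h 'ERROR' "$dir"/app.log*
  rm -rf "$dir"
}
```

On a real server, the same idea is sudo zgrep -h 'OutOfMemoryError' /var/log/myapp/app.log*, which covers the active file and every rotation in one pass.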

Build a small reusable watcher

A tiny wrapper script reduces keystrokes and standardizes buffering options. Put this somewhere in your $PATH (for example, /usr/local/bin/watchlog) and make it executable (chmod +x /usr/local/bin/watchlog).

```bash
#!/usr/bin/env bash
# watchlog: follow a log with safe defaults, pattern include/exclude
# Usage: watchlog /path/to/log "include_regex" ["exclude_regex"]
set -euo pipefail

LOG="${1:-}"
INCLUDE="${2:-^}"   # "^" matches every line without highlighting anything
EXCLUDE="${3:-}"

if [[ -z "$LOG" ]]; then
  echo "Usage: watchlog /path/to/log \"include_regex\" [\"exclude_regex\"]" >&2
  exit 1
fi

# Drop excluded lines first, so the color codes added later cannot affect matching
exclude_filter() {
  if [[ -n "$EXCLUDE" ]]; then
    grep -vE --line-buffered -- "$EXCLUDE"
  else
    cat
  fi
}

# Follow through rotations, force line-buffered grep, keep colors
tail -F -- "$LOG" \
  | exclude_filter \
  | GREP_COLORS='ms=01;31' grep --color=always -E --line-buffered -- "$INCLUDE"
```

You can now type watchlog /var/log/nginx/error.log 'crit|emerg|alert' 'client closed connection' (prefixed with sudo if the log requires it) and keep a consistent display across servers.

Monitor two logs side-by-side

Incidents often involve both an upstream (e.g., Nginx) and your app. You can multiplex with tmux panes or prefix each stream so you can tell them apart in one window.

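```bash
# Combine two streams with prefixes for quick correlation
sudo -v   # cache sudo credentials before backgrounding a sudo command
sudo stdbuf -oL tail -F /var/log/nginx/error.log \
  | sed -u 's/^/[nginx] /' &
sudo stdbuf -oL tail -F /var/log/myapp/app.log \
  | sed -u 's/^/[app] /'
wait
```

stdbuf -oL line-buffers tail's output so lines appear promptly, and sed -u keeps sed streaming line by line. stdbuf goes after sudo because sudo resets the environment, dropping the LD_PRELOAD setting that stdbuf relies on; running sudo -v first caches your password so the backgrounded sudo does not stall at a prompt. In an interactive shell, Ctrl+C stops only the foreground pipeline, so stop the background one afterward with kill %1. This is a practical middle ground when you do not want to spin up a dashboard.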
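
Another option avoids background jobs altogether. GNU tail can follow several files in one process and prints a ==> FILE <== header whenever its output switches between files; the hypothetical label_tail_headers function below rewrites those headers into a per-line prefix. Because everything runs as one foreground pipeline, a single Ctrl+C stops it. It assumes GNU tail's header format, drops empty lines (tail prints a blank line before each new header), and would misread a real log line that looks like a header.

```bash
# label_tail_headers: turn GNU tail's "==> FILE <==" headers into a "[FILE] " prefix
# on every following line. Hypothetical helper; see the assumptions above.
label_tail_headers() {
  awk '
    /^==> .* <==$/ {                       # header tail prints when switching files
      f = substr($0, 5, length($0) - 8)    # strip the leading "==> " and trailing " <=="
      sub(/.*\//, "", f)                   # keep only the basename
      next
    }
    /^$/ { next }                          # blank separator line before each header
    { print "[" f "] " $0; fflush() }
  '
}
```

Usage: sudo tail -F -n 0 /var/log/nginx/error.log /var/log/myapp/app.log | label_tail_headers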

Keep sessions stable during disconnects

Long-running streams die when your SSH session drops. Use tmux or screen to keep them alive and to capture scrollback for later review. If you attach a watcher in a tmux pane, you can pair it with a shell for ad-hoc commands or jump between panes while the stream continues. The same commands above work unchanged inside the session, which keeps your habits consistent.

```bash
# Start a reusable tmux session dedicated to log watching
tmux new -s logs
```

Reattach with tmux attach -t logs after reconnecting, and your streams will still be running. This simple step turns quick one-liners into durable monitors during an outage.

Performance and safety notes

Streaming text is cheap, but it is still I/O: run watchers on the server that owns the log to avoid remote latency, and do not tail gigantic historical files without constraints. If you must scan a large file, set LC_ALL=C so grep can skip multibyte locale handling, and prefer fixed strings or anchored patterns, which give the matcher less work. Be careful with secrets: grep will happily echo whatever it matches, so avoid copying raw logs into shared channels and prefer patterns that surface only the necessary lines. If you need to hand off to a teammate, share the pattern and path, not a blob of log text, so they can reproduce the view.

```bash
# Faster fixed-string search for a known token: -F treats it as a literal, not a regex
sudo env LC_ALL=C grep -F 'SpecificErrorCode' /var/log/myapp/app.log
```

Writing sudo env LC_ALL=C grep, rather than LC_ALL=C sudo grep, sets the variable for grep itself, since sudo may not pass locale variables through. This keeps load minimal and results quick when you know the exact string you need. As a rule, start with -F for literals and switch to -E only when you need regex features.
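
When a file is too large to scan comfortably, you can bound the work twice: read only the end of the file, and stop after a fixed number of matches with grep -m. The helper below is a hypothetical sketch with arbitrary defaults (the last 100,000 lines, at most 50 matches); because sudo cannot run shell functions, call it from a shell that can already read the log.

```bash
# recent_matches FILE LITERAL [LINES] [MAX]: fixed-string search over the last LINES
# lines of FILE, stopping after MAX matches. Hypothetical helper; defaults are arbitrary.
recent_matches() {
  local file="$1" literal="$2" lines="${3:-100000}" max="${4:-50}"
  tail -n "$lines" -- "$file" | LC_ALL=C grep -F -m "$max" -- "$literal"
}
```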

Where to go next

The approach here gets you from “something’s wrong” to “this class of requests is failing” rapidly, which is what you need in the first 10 minutes of an incident. From there, you can fold in structured logs, ship logs to a central store, or replace ad-hoc grep with queries that reflect your schema. If your systemd services log to the journal instead of files, the same ideas apply with journalctl -f and grep, and you can migrate patterns from this guide unchanged. Keep the small wrapper script, evolve its defaults as you learn your app’s failure modes, and make it the first tool you open when the pager goes off.