Supabase is open-source at its core, built around tools like PostgreSQL, and they’ve provided official guides for running it on your own infrastructure using Docker. That means you can spin it up on a DigitalOcean Droplet and manage everything yourself—no reliance on Supabase’s hosted platform. But let’s be realistic here: self-hosting isn’t as plug-and-play as their cloud service. You’ll handle scaling, security, backups, and updates on your own, and some features like seamless multi-project management or built-in SSL for custom domains might require extra work or third-party tools.

From my experience, the self-hosted version mirrors the hosted one pretty closely in core functionality, but it might lag on the very latest features, and things like realtime database limits aren’t enforced by Supabase—they’re just bounded by your server’s resources. Also, watch out for production pitfalls; the basic setup can have security gaps, like exposed ports or weak defaults, so we’ll address hardening it as we go.

If you’re coming from Supabase’s hosted plan, self-hosting frees you from things like project pausing after inactivity or usage caps, but it shifts the burden to you for reliability. For instance, email auth (like magic links) won’t work out of the box. You have to configure an SMTP provider, and you can’t simply send mail straight from the server, because many VPS hosts, DigitalOcean included, block outbound port 25 to prevent spam. And storage? You can keep files on local volumes or integrate with something like DigitalOcean Spaces for S3-compatible object storage, with upload size limits set by your own configuration and hardware rather than by a pricing plan.

Now, let’s walk through how to deploy this on DigitalOcean. I’ll assume you’re comfortable with basic command-line stuff—if not, no worries, I’ll flag where to look for more help. This is based on official Supabase docs and my own setup experience, so it should hold up over time as long as Docker remains the go-to method (which it likely will). We’ll focus on a manual Docker Compose setup on an Ubuntu Droplet, as that’s the most flexible approach. If you want something quicker, DigitalOcean has a Marketplace 1-Click App for Supabase that pre-installs it on a Droplet, but the UI for that might evolve, so check their site for current steps. There are also automated options using tools like Terraform, but we’ll stick to basics first.

Start by signing up for DigitalOcean if you haven’t already—they offer affordable Droplets starting small, but for Supabase, aim for at least 2GB RAM and 1 vCPU to handle the stack comfortably without choking on realtime features or multiple users. You can scale up later. Once logged in, create a new Droplet: Choose Ubuntu (latest LTS version for stability), pick your region (closer to users is better for latency), and add an SSH key for secure access instead of a password. After it’s provisioned, note down the public IP—you’ll SSH in with something like ssh root@your-droplet-ip.

First things first, update your system and install prerequisites. Log in via SSH, then run:

sudo apt update && sudo apt upgrade -y

This keeps everything fresh. Next, you’ll need Git and Docker. Git is usually preinstalled on Ubuntu images; if git --version fails, add it with sudo apt install git -y. If your Droplet didn’t come with Docker pre-installed (some images do), here’s how to add it reliably. We’ll pull from Docker’s official repo to avoid outdated Ubuntu packages.

sudo apt install apt-transport-https ca-certificates curl software-properties-common -y
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
sudo apt update

sudo apt install docker-ce docker-ce-cli containerd.io docker-compose-plugin -y

Verify it’s running with sudo systemctl status docker; you should see that it’s active. If not, start it with sudo systemctl start docker. If you work as a non-root user (a good idea rather than staying logged in as root), add that user to the docker group so you don’t need sudo every time: sudo usermod -aG docker $USER, then log out and back in so the group change takes effect.
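Before moving on, it can help to confirm the whole toolchain in one go. This is a small sketch of my own, not part of Supabase’s instructions; missing_tools is a hypothetical helper name, and the checks assume the packages above (docker-compose-plugin is what provides the docker compose subcommand).

```shell
# Print every argument that is not an executable command on PATH.
missing_tools() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

missing="$(missing_tools git docker curl)"
if [ -n "$missing" ]; then
  echo "Missing:" $missing
fi

# 'docker compose' is a plugin subcommand, so check it separately.
if command -v docker >/dev/null 2>&1 && docker compose version >/dev/null 2>&1; then
  echo "docker compose plugin: OK"
else
  echo "docker compose plugin: not available"
fi

# Fails until you log in again after 'usermod -aG docker', or if the daemon is down.
if docker info >/dev/null 2>&1; then
  echo "Docker daemon reachable as $(id -un)"
else
  echo "Cannot reach the Docker daemon as $(id -un)"
fi
```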

Now, clone the Supabase repo. Passing --depth 1 fetches only the latest commit, so the download stays small.

git clone --depth 1 https://github.com/supabase/supabase

Head into the docker folder (cd supabase/docker) and copy the example env file to get started on config:

cp .env.example .env

This .env file is your control center; edit it with a text editor like nano (nano .env). Key things to set right away: a strong, random POSTGRES_PASSWORD (at least 32 characters) and your API keys. For the keys, first make up a random JWT_SECRET of at least 32 characters, then derive ANON_KEY and SERVICE_ROLE_KEY from it. Supabase’s self-hosting docs provide a generator form for this, or you can use any JWT tool that signs with HS256. Update JWT_SECRET, ANON_KEY, and SERVICE_ROLE_KEY together, since the keys are only valid for the secret that signed them. Don’t skip this: the defaults are published in the repo and insecure for anything real. Also set API_EXTERNAL_URL (and SUPABASE_PUBLIC_URL) to your Droplet’s address, like http://your-droplet-ip:8000, for now; we’ll switch to a domain later.
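If you’d rather not paste secrets into a web form, you can generate everything locally with openssl. This is a sketch under a few assumptions: the payload (role, iss, iat, exp) is modeled on what Supabase’s generator produces, while the iss value and the five-year expiry are my choices. As far as I know, what matters is that role is anon or service_role and that the token is signed with the same JWT_SECRET. b64url and make_jwt are hypothetical helper names.

```shell
# Base64url-encode stdin without padding, as JWTs require.
b64url() {
  openssl base64 -A | tr '+/' '-_' | tr -d '='
}

# Build an HS256-signed JWT: $1 = secret, $2 = JSON payload.
make_jwt() {
  local secret="$1" payload="$2"
  local header='{"alg":"HS256","typ":"JWT"}'
  local unsigned sig
  unsigned="$(printf '%s' "$header" | b64url).$(printf '%s' "$payload" | b64url)"
  sig="$(printf '%s' "$unsigned" | openssl dgst -sha256 -hmac "$secret" -binary | b64url)"
  printf '%s.%s\n' "$unsigned" "$sig"
}

# Hex output avoids characters that need quoting in .env or connection strings.
POSTGRES_PASSWORD="$(openssl rand -hex 20)"   # 40 characters
JWT_SECRET="$(openssl rand -hex 32)"          # 64 characters

now="$(date +%s)"
exp=$((now + 5 * 365 * 24 * 3600))           # roughly five years (assumption)

ANON_KEY="$(make_jwt "$JWT_SECRET" "{\"role\":\"anon\",\"iss\":\"supabase\",\"iat\":$now,\"exp\":$exp}")"
SERVICE_ROLE_KEY="$(make_jwt "$JWT_SECRET" "{\"role\":\"service_role\",\"iss\":\"supabase\",\"iat\":$now,\"exp\":$exp}")"

# Copy these lines into .env; keep SERVICE_ROLE_KEY private.
printf 'POSTGRES_PASSWORD=%s\nJWT_SECRET=%s\nANON_KEY=%s\nSERVICE_ROLE_KEY=%s\n' \
  "$POSTGRES_PASSWORD" "$JWT_SECRET" "$ANON_KEY" "$SERVICE_ROLE_KEY"
```

You can paste a generated key into any JWT decoder to confirm the role claim before restarting the stack.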

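If you want email auth working, configure the SMTP section. Because DigitalOcean blocks outbound port 25, use a relay service such as SendGrid (at the time of writing, its free tier gave 100 emails/day for 60 days). Sign up, create an API key, and fill in SMTP_HOST=smtp.sendgrid.net, SMTP_PORT=587, SMTP_USER=apikey (that literal string), SMTP_PASS=your-sendgrid-key, and SMTP_SENDER_NAME. Set SMTP_ADMIN_EMAIL to your sender address, and set ENABLE_EMAIL_SIGNUP=true if users should be able to sign up by email. If port 587 also turns out to be blocked on your account, SendGrid accepts connections on port 2525 as well.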
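Editing .env by hand works fine, but if you script your setup or rotate credentials, it helps to update keys idempotently. Here’s a minimal sketch, assuming you run it from supabase/docker. set_env is a hypothetical helper, and SENDGRID_API_KEY, you@example.com, and "My App" are placeholders you supply.

```shell
# Set KEY=value in an env file (default ./.env): replaces existing
# assignments of KEY in place, or appends one if the key is absent.
# Values are passed through ENVIRON so awk doesn't reinterpret backslashes.
set_env() {
  local key="$1" value="$2" file="${3:-.env}" tmp
  tmp="$(mktemp)" || return 1
  K="$key" V="$value" awk '
    index($0, ENVIRON["K"] "=") == 1 { print ENVIRON["K"] "=" ENVIRON["V"]; found = 1; next }
    { print }
    END { if (!found) print ENVIRON["K"] "=" ENVIRON["V"] }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's permissions
  local status=$?
  rm -f "$tmp"
  return "$status"
}

# Example SendGrid settings; only runs when .env exists and the key is exported.
if [ -f .env ] && [ -n "${SENDGRID_API_KEY:-}" ]; then
  set_env SMTP_HOST smtp.sendgrid.net
  set_env SMTP_PORT 587
  set_env SMTP_USER apikey
  set_env SMTP_PASS "$SENDGRID_API_KEY"
  set_env SMTP_ADMIN_EMAIL you@example.com
  set_env SMTP_SENDER_NAME "My App"
  set_env ENABLE_EMAIL_SIGNUP true
fi
```

The same helper works for any other key in .env, such as the JWT values or the dashboard credentials.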

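For storage, the default uses local volumes, but for production you can offload files to scalable object storage with DigitalOcean Spaces. Create a Space in your DO account, generate Spaces access keys, and update .env with STORAGE_BACKEND=s3, S3_ACCESS_KEY, S3_SECRET_KEY, S3_REGION (like nyc3), and S3_BUCKET. Because Spaces is S3-compatible rather than AWS itself, the storage service also needs the regional endpoint, https://<region>.digitaloceanspaces.com. Check the storage service in docker-compose.yml too: Compose only hands .env values to a container when that service’s environment block references them, and depending on your version the variable names the storage container reads may differ from the ones above.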
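Before pointing Supabase Storage at a Space, it’s worth confirming that the keys and bucket actually work. This sketch reuses the S3_ACCESS_KEY and S3_SECRET_KEY names from above and assumes the AWS CLI is installed, which is a separate tool and not part of Supabase. spaces_endpoint and check_space are hypothetical helpers, and my-supabase-files is a placeholder bucket name.

```shell
# Spaces exposes one S3-compatible endpoint per region, e.g. nyc3.
spaces_endpoint() {
  local region="$1"
  case "$region" in
    ''|*[!a-z0-9]*) echo "invalid region: '$region'" >&2; return 1 ;;
  esac
  printf 'https://%s.digitaloceanspaces.com\n' "$region"
}

# List the bucket with the Space's keys; a non-zero exit usually means the
# keys, region, or bucket name are wrong. Requires the 'aws' CLI.
check_space() {
  local region="$1" bucket="$2" endpoint
  endpoint="$(spaces_endpoint "$region")" || return 1
  AWS_ACCESS_KEY_ID="$S3_ACCESS_KEY" AWS_SECRET_ACCESS_KEY="$S3_SECRET_KEY" \
    aws s3 ls "s3://${bucket}" --endpoint-url "$endpoint" --region "$region"
}

# Example (not run here):
#   S3_ACCESS_KEY=... S3_SECRET_KEY=... check_space nyc3 my-supabase-files
```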

Pull the images and spin everything up:

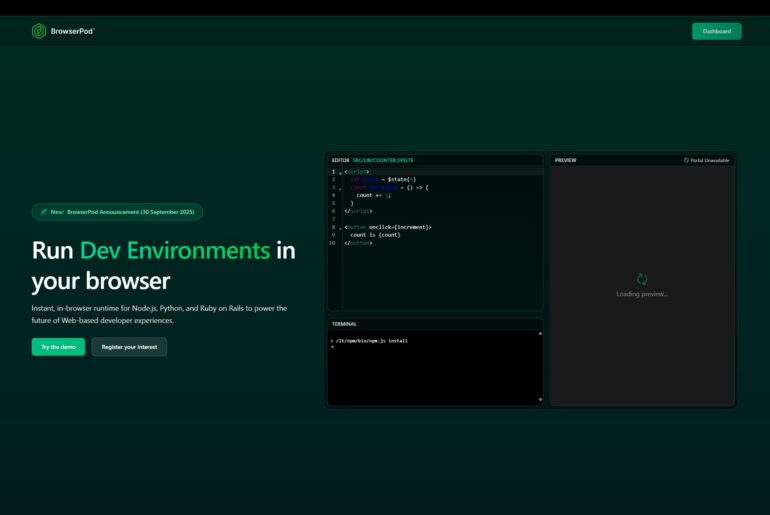

docker compose pull
docker compose up -d

The -d flag runs the stack in detached mode. Check status with docker compose ps: all services (db, auth, realtime, and so on) should show as running, and those with health checks as healthy, which can take a minute on first start. If not, logs are your friend: docker compose logs -f. Access the Studio dashboard at http://your-droplet-ip:8000. The default login is supabase / this_password_is_insecure_and_should_be_updated; change it immediately by setting DASHBOARD_USERNAME and DASHBOARD_PASSWORD in .env and restarting the stack.
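If you’d rather not eyeball docker compose ps, a small filter can report which services aren’t ready yet. It’s a sketch that assumes a recent Compose v2 (for Go-template --format output) and Docker’s usual status strings such as "Up 2 minutes (healthy)". not_ready and wait_for_stack are hypothetical helpers.

```shell
# Read "<service> <status text>" lines on stdin and print services that are
# neither healthy nor running without a health check.
not_ready() {
  awk '{
    svc = $1
    $1 = ""
    status = $0
    if (status ~ /\(healthy\)/) next
    if (status ~ /^ *Up/ && status !~ /\((unhealthy|health: starting)\)/) next
    print svc
  }'
}

# Poll for up to ~2 minutes; run from supabase/docker.
wait_for_stack() {
  local i pending
  for i in $(seq 1 24); do
    pending="$(docker compose ps -a --format '{{.Service}} {{.Status}}' | not_ready)"
    if [ -z "$pending" ]; then
      echo "All services ready"
      return 0
    fi
    sleep 5
  done
  echo "Still not ready:" $pending
  return 1
}
```

Running wait_for_stack right after docker compose up -d gives you a clear pass/fail instead of a wall of statuses; on failure, docker compose logs for the listed services is the next step.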

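At this point, you’ve got a basic self-hosted Supabase running. Test it by connecting from a client app: your REST endpoint is http://your-droplet-ip:8000/rest/v1/, auth is at /auth/v1/, storage at /storage/v1/, and realtime at /realtime/v1/. Use the anon key for public, client-side access. The service role key bypasses Row Level Security, so keep it on trusted servers for admin tasks only.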
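For a quick check from the Droplet or your laptop before wiring up a client, a curl-based smoke test works. This sketch assumes the default Kong routes, where both paths expect the apikey header. http_status and supabase_smoke_test are hypothetical names. A healthy default install should answer 200 on both, though the exact codes can vary by version.

```shell
# Print the HTTP status code for a GET sent with the Supabase key headers.
# curl prints 000 when it can't connect at all.
http_status() {
  local url="$1" key="$2"
  curl -s -o /dev/null -w '%{http_code}' --max-time 10 \
    -H "apikey: ${key}" -H "Authorization: Bearer ${key}" "$url"
}

# Returns non-zero if any checked endpoint doesn't answer 200.
supabase_smoke_test() {
  local base="${1%/}" key="$2" ok=0 path code
  for path in /auth/v1/health /rest/v1/; do
    code="$(http_status "${base}${path}" "$key")"
    echo "${path} -> ${code}"
    [ "$code" = "200" ] || ok=1
  done
  return "$ok"
}

# Example: supabase_smoke_test "http://your-droplet-ip:8000" "$ANON_KEY"
```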

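But remember what I said earlier about security? This setup serves plain HTTP with ports exposed directly, which isn’t great for production. To harden it, put a reverse proxy with SSL in front. Caddy is a simple choice because it obtains and renews Let’s Encrypt certificates automatically. Install it: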

sudo apt install -y debian-keyring debian-archive-keyring apt-transport-https
curl -1sLf 'https://dl.cloudsmith.io/public/caddy/stable/gpg.key' | sudo gpg --dearmor -o /usr/share/keyrings/caddy-stable-archive-keyring.gpg
curl -1sLf 'https://dl.cloudsmith.io/public/caddy/stable/debian.deb.txt' | sudo tee /etc/apt/sources.list.d/caddy-stable.list
sudo apt update
sudo apt install caddy

Then edit /etc/caddy/Caddyfile to proxy to Supabase:

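dropletdrift.com {
    # Kong on port 8000 routes /rest/v1, /auth/v1, /storage/v1, /realtime/v1
    # and Studio itself, so one upstream covers everything. Studio's own
    # port 3000 is typically not published to the host in the stock compose file.
    reverse_proxy localhost:8000
}

Replace dropletdrift.com with your own domain, point its DNS A record at the Droplet IP, and restart Caddy (sudo systemctl restart caddy); it obtains the certificate automatically once DNS resolves. Update API_EXTERNAL_URL and SUPABASE_PUBLIC_URL in .env to https://yourdomain. Routing everything through Kong keeps Studio behind its dashboard login; for extra protection, add something like Authelia in front of it. Also close port 8000 to the outside world. Docker-published ports bypass ufw rules, so use a DigitalOcean Cloud Firewall that only allows 22, 80, and 443. If you make changes, restart with docker compose down && docker compose up -d; data persists in the volumes as long as you don’t add -v.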
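To see which ports are still reachable from outside once Caddy is in place, you can list the TCP listeners bound to all interfaces. This is a sketch assuming the iproute2 ss tool that ships with Ubuntu; public_ports is a hypothetical helper. On a hardened box, you’d want the Cloud Firewall to cover everything this prints except 22, 80, and 443.

```shell
# Read `ss -tlnH` output on stdin and print the ports bound to all interfaces.
# Docker-published ports (e.g. Kong on 8000) normally appear here via docker-proxy,
# and ufw does not filter them because Docker manages its own iptables rules.
public_ports() {
  awk '{
    local_addr = $4
    port = local_addr
    sub(/.*:/, "", port)
    if (local_addr ~ /^(0\.0\.0\.0|\*|\[::\]):/) print port
  }' | sort -un
}

if command -v ss >/dev/null 2>&1; then
  echo "Listening on all interfaces:" $(ss -tlnH 2>/dev/null | public_ports)
fi
```

Running it from the Droplet shows what is listening, not what the Cloud Firewall lets through. To confirm the firewall works, try connecting to port 8000 from another machine.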

For backups, use DO’s built-in Droplet snapshots or tools like pg_dump for the DB. Monitor resources; if realtime chews up CPU, scale your Droplet. And if this feels overwhelming, check community repos for automation—there’s one from DigitalOcean using Terraform to provision everything, including Spaces and firewalls, which can save time once you’re comfortable. That’s the gist—self-hosting gives you control, but it’s ongoing work. Let me know if you hit snags!
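Here’s one way to automate the pg_dump side with simple rotation. It assumes a few things: the Compose service is named db (as in Supabase’s docker-compose.yml), you run the script from supabase/docker, and the postgres role can connect from inside the container without a password prompt (if not, pass one with docker compose exec -e PGPASSWORD=...). If pg_dump hits permission errors on Supabase-managed schemas, you may need a more privileged role such as supabase_admin. BACKUP_DIR, KEEP, backup_db, prune_backups, and backup.sh are hypothetical names. Note that the dumps stay on the Droplet unless you copy them elsewhere, for example to Spaces.

```shell
#!/usr/bin/env bash
# backup.sh (hypothetical): logical dump of the Supabase database plus retention.

BACKUP_DIR="${BACKUP_DIR:-/var/backups/supabase}"   # hypothetical location
KEEP="${KEEP:-7}"                                    # number of dumps to keep

backup_db() {
  mkdir -p "$BACKUP_DIR" || return 1
  local file="$BACKUP_DIR/supabase-$(date -u +%Y%m%dT%H%M%SZ).sql.gz"
  # -T disables pseudo-TTY allocation so the dump streams cleanly through the pipe.
  docker compose exec -T db pg_dump -U postgres -d postgres | gzip > "$file"
  if [ "${PIPESTATUS[0]}" -ne 0 ]; then
    echo "pg_dump failed; removing $file" >&2
    rm -f -- "$file"
    return 1
  fi
  echo "Wrote $file"
}

# Delete all but the newest $2 dumps in directory $1 (timestamped names sort by age).
prune_backups() {
  local dir="$1" keep="$2" old
  ls -1 "$dir"/supabase-*.sql.gz 2>/dev/null | sort -r | tail -n +"$((keep + 1))" |
    while IFS= read -r old; do rm -f -- "$old"; done
}

# Only act when called as "./backup.sh run", e.g. from cron:
#   0 3 * * * cd /root/supabase/docker && ./backup.sh run
if [ "${1:-}" = "run" ]; then
  backup_db && prune_backups "$BACKUP_DIR" "$KEEP"
fi
```

Test a restore into a scratch database now and then; a backup you have never restored is only a hope.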