How often do you ask an AI assistant a simple factual question — say, “Who discovered penicillin?” — and it answers confidently, yet wrongly? Or you upload a photo of your living room and the AI describes an armchair that doesn’t exist. These are not bugs. They are hallucinations — and by 2025 we’ve learned that they are part of the AI story, not just glitches to be wished away.

To understand where we are now, we have to go back a little in time.

The term “hallucination” in AI began gaining traction around the time large language models (LLMs) became widely used in public tools. As chatbots like ChatGPT and BlenderBot entered the mainstream, users noticed they sometimes “made things up.”

Early on, these hallucinations might have been viewed as amusing quirks. But as AI began being used in serious domains — medicine, law, business — the stakes rose. Researchers started classifying hallucinations, measuring their frequency, and designing fixes.

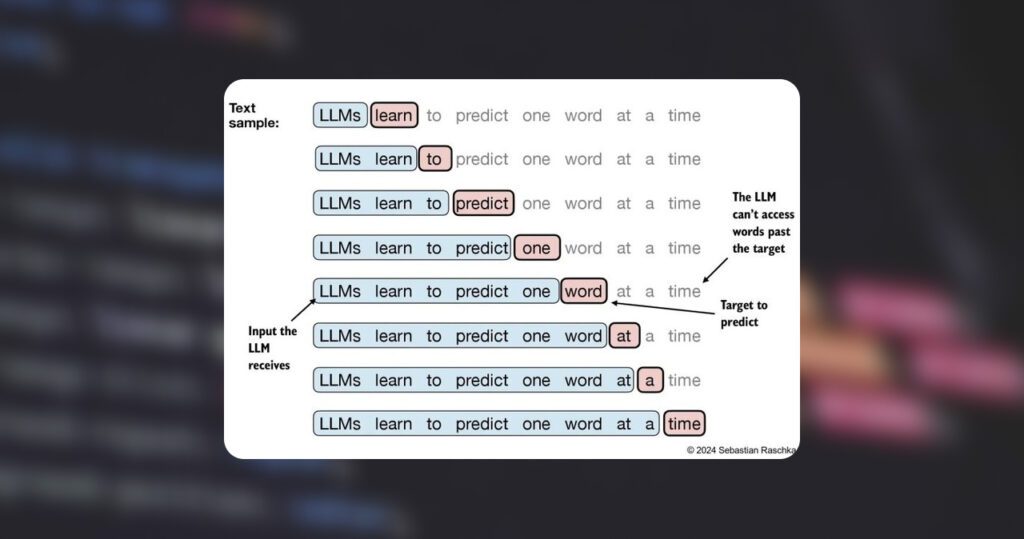

By the early 2020s, it was clear hallucinations would not vanish entirely — models don’t have a built-in “truth detector.” They generate plausible continuations of text based on statistics, not “reasoning” or understanding.

Now in 2025, we are in a phase of realism. We accept hallucination as a fundamental challenge, and researchers are pushing deeply on detection, mitigation, and system design aimed at safety, not perfect output.

What exactly is a hallucination

A hallucination is a confident but wrong (or at least ungrounded) output from an AI model. It can appear in many forms:

- A factual hallucination: the model states a false fact (e.g. “the capital of Australia is Sydney,” or a made-up statistic).

- A semantic or logical hallucination: the model’s response is internally incoherent or contradicts itself.

- A task-specific hallucination: in formats like image captioning or code generation, the model invents objects or function names that do not exist. For example, code models sometimes suggest fake software package names — a phenomenon called slopsquatting.

Researchers often divide hallucinations into intrinsic vs extrinsic:

- Intrinsic hallucinations stem from internal model inconsistency (the model contradicts itself).

- Extrinsic hallucinations are errors with respect to external reality (the model asserts something false in the world).

In vision or multimodal systems (combining image + text), hallucinations can be even more varied: describing objects that aren’t there, attributing impossible properties, or mixing elements in nonsensical ways.

As one concrete example: in 2025, researchers manipulating embeddings of an open source multimodal model (DeepSeek) were able to force targeted visual hallucinations — making the model confidently claim objects that weren’t present with very high hallucination rates.

Another recent system called Feedback-Enhanced Hallucination-Resistant Vision-Language Model dynamically checks its own outputs, suppresses low-confidence claims, and ties descriptions to verified visual detections. It reportedly reduced hallucination by about 37 percent in tests.

So hallucinations remain, but our tools to spot and handle them are advancing.

Why do hallucinations still happen?

You may wonder: with all the progress in AI, why can’t we just eliminate hallucinations? Here’s a look under the hood.

AI language models are fundamentally predictors of what comes next. Given a prompt, they sample from likely continuations. That means they are not grounded in verifying truth — they optimize for fluency and plausibility, not factual correctness.

Training datasets are large and messy. They include conflicting, outdated, or incorrect information. The model may absorb errors, biases, or hallucinations already present.

Models are also rewarded during training to produce outputs (rather than abstain). That incentivizes “guessing” rather than admitting uncertainty. OpenAI’s own 2025 work describes how standard training and evaluation setups push models toward overconfidence in their guesses.

When questions are about specialized, niche, or very recent data, the model may have weak or missing signals. It may fill gaps with plausible but incorrect content.

For multimodal systems, mapping across modalities (e.g., image to text) adds more complexity and error sources. The model may misinterpret or overinterpret visual cues.

Finally, adversarial manipulations are possible. Models have vulnerabilities: one study induced hallucinations by tweaking embeddings deliberately.

In other words, hallucinations are less a bug and more a natural consequence of current generative design.

Hallucination in the wild: how often, and where it matters

You might wonder: how common are these hallucinations nowadays? And where do they cause real harm?

Some recent research suggests even top models are hallucination-free only about 35 percent of the time in certain factual tests.

Domain reports show that hallucination rates depend heavily on topic:

- In legal and financial contexts, average hallucination rates for top models are often in the single to low double digits (e.g. 2 %–6 %) under controlled conditions.

- In medical or scientific domains, hallucination risk is higher if precision is needed.

One recent user-centric study of mobile AI apps analyzed thousands of app reviews and found that around 35% of flagged reviews indeed described hallucination-type errors as perceived by users.

A breakthrough detection method from Oxford used entropy-based uncertainty estimators to flag when a model is likely hallucinating, improving robustness of detection.

So hallucinations are common enough that they cannot be ignored.

Real consequences

In February 2025, the BBC tested major chatbots (ChatGPT, Copilot, Gemini, Perplexity) on current affairs questions. Over half the AI answers had significant distortions or outright errors — misreporting key dates, fabricating quotes, misattributing roles.

In another illustrative case, Google’s AI Overview tool in 2025 cited an April Fool’s satire article about “microscopic bees powering computers” as a factual source — its system had convincingly hallucinated it.

In code generation, slopsquatting (inventing fake library names) has become a known supply chain risk: LLMs have suggested nonexistent packages, sometimes downloaded by developers who trust the AI.

Imagine a medical diagnosis assistant giving a wrong symptom or citing a nonexistent study — that’s a very real risk. In fact, researchers have developed a Reference Hallucination Score (RHS) to quantify how often chatbots invent citations in medical contexts.

Thus, hallucination is not just theoretical — it affects trust, credibility, safety, and in some cases real lives.

Where are we in 2025 — and where might things go?

The status in 2025 is one of guarded optimism. Hallucinations are still with us, but our ability to detect, control, and mitigate them is advancing quickly.

OpenAI’s 2025 research puts spotlight on improvements in training and evaluation to make AI more reliable.

New models (e.g. GPT-5) are reported to hallucinate less than prior versions, although they still do so. Some experts argue that entirely eliminating hallucinations may be impossible under current generative paradigms.

Stride is being made in uncertainty estimation and self-awareness: models are being enhanced to detect when they don’t “know” something, and they can decline to answer or flag low confidence.

Hybrid neuro-symbolic systems are also gaining traction: combining neural models’ fluency with rule-based or symbolic systems’ reliability may reduce hallucination in critical domains.

Agentic systems — using multiple specialized sub-agents that critique and refine each other’s outputs — have shown promise in reducing hallucination in experiments published in 2025.

In vision systems, feedback loops and thresholding have been used to reject weak visual claims, improving alignment between detected objects and descriptions.

That said, improvements are incremental. The goal is not perfection, but making hallucination manageable, transparent, and detectable.

What the future might look like

I imagine a few possible trajectories for hallucination in AI:

- Hybrid architectures become mainstream

Pure generative models may give way (or be supplemented) with systems that reason over structured knowledge bases, symbolic logic, or external vetted sources. Hallucination will shift from being “model error” to being a choice (the system decides when to guess vs refuse). - Standardized hallucination scoring and regulation

Just like accuracy, speed, or bias, AI services might be required to publish hallucination error rates. Third-party audits could validate those numbers, particularly in regulated domains (health, law, finance). - Improved human + AI workflows

Most real systems will continue to have a human in the loop, with AI outputs flagged for review, not deployed blindly. Interfaces may highlight uncertain segments or require human verification. - Adaptive trust and dynamic fallback

AI systems may dynamically adjust whether to answer, refer to external sources, or decline when uncertainty is high — rather than always attempting an answer. - Adversarial defense & robustness

As embedding manipulation attacks (like the DeepSeek study) show, models will need hardened defenses against crafted prompts that induce hallucination. Robustness will be part of deployment best practices.

So by 2030 or earlier, we might see AI tools that are “usually reliable,” with known and controlled hallucination rates, rather than perfect oracles.

What this means for you — and how to stay safe

As someone using AI tools (for work, writing, research, or curiosity), here are practical takeaways:

- Always treat AI output as provisional. Verify facts, especially when decisions depend on them.

- Be skeptical of citations or references given by models — check whether they are real and accurate.

- Use tools or settings that flag uncertainty or low confidence.

- For critical tasks (e.g. legal, medical), ensure there is human oversight or a verification layer.

- Prefer AI systems or features built with hybrid or retrieval-based architectures over pure generative models when precision is essential.

- Stay aware of domain risk: hallucination rates are higher in specialized, less-represented fields.

- If you detect a hallucination, consider reporting it — feedback helps research improve models.

Hallucinations in AI might sound like sci-fi, but in 2025 they are very real. The landscape is evolving fast. Models are improving, detection is getting smarter, and architectures are becoming more robust. But we are not yet at the point where we can blindly trust generative AI. The trick is not suppression, but management — transparency, fallback strategies, and human oversight.