Let me take you back. In the early web, we built sites by writing HTML/CSS files and serving them from a server or even a shared host. Interactivity came later via CGI, server-side scripts, and then monolithic CMS-driven sites. Over time we traded simplicity for dynamic features, but at a cost: performance bottlenecks, scaling pain, security exposure, and coupling between presentation and business logic.

Around 2015, Mathias Biilmann (co-founder of Netlify) coined “JAMstack” (JavaScript, APIs, Markup) as a rallying concept for a different approach: decouple the front end, pre-render what you can, and leverage APIs or serverless functions for runtime behavior. The idea wasn’t brand new, but it crystallized a philosophy: do as much work ahead of time (build time), serve via global CDNs, and reduce runtime coupling.

Over the past decade, the JAMstack ecosystem matured: headless CMSs, serverless functions, edge runtimes, and hybrid frameworks all blurred the lines between “pure static” and “fully dynamic.” By 2025, we don’t just talk about static sites anymore: we talk about composable, edge-aware, API-first architectures, with JAMstack thinking embedded in many modern frameworks.

In this guide, I’ll walk you through:

- The core principles redefined for 2025

- How the landscape has shifted

- Where JAMstack still thrives

- Where it struggles

- A decision framework for when (and when not) to adopt it

Let’s start by revisiting the core principles.

The core principles (revisited for 2025)

JAMstack has often been described via two pillars: pre-rendering and decoupling. In 2025, these remain relevant—but their implementations have evolved.

Pre-rendering

Pre-rendering means generating HTML (or equivalent output) ahead of user requests, so that pages are not constructed dynamically on every request. This minimizes server load and latency. Early static site generators (SSGs) such as Jekyll, Hugo, and Gatsby showed the power of this idea.

Today, with large sites and frequent content changes, “full rebuilds” are less tenable. So modern approaches use incremental builds, on-demand builders, static generation combined with partial SSR, or edge-rendering fallback strategies. Some frameworks statically generate most pages, then fall back to dynamic regeneration at the edge when needed. This hybrid model retains many of the benefits while reducing the rebuild burden.
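One way to picture the hybrid model is a page cache that serves a stored copy while it is fresh and regenerates it once it goes stale. The sketch below is a minimal in-memory illustration under stated assumptions, not how any particular framework implements it: `createPageCache`, `renderPage`, and `ttlMs` are hypothetical names, and regeneration happens inline here, whereas real platforms typically do it in the background.

```javascript
// Minimal in-memory sketch of "serve static, regenerate when stale".
// createPageCache, renderPage, and ttlMs are hypothetical names.
function createPageCache(renderPage, ttlMs, now = () => Date.now()) {
  const cache = new Map(); // path -> { html, generatedAt }

  return function getPage(path) {
    const entry = cache.get(path);

    // First request for this path: render on demand and store the result.
    if (!entry) {
      const html = renderPage(path);
      cache.set(path, { html, generatedAt: now() });
      return { html, status: "generated" };
    }

    // Still within the freshness window: serve the stored copy untouched.
    if (now() - entry.generatedAt < ttlMs) {
      return { html: entry.html, status: "fresh" };
    }

    // Stale: answer with the old copy, and refresh the entry for later requests.
    const staleHtml = entry.html;
    cache.set(path, { html: renderPage(path), generatedAt: now() });
    return { html: staleHtml, status: "stale" };
  };
}
```

The visitor who hits a stale page still gets an immediate response, and the next visitor sees the regenerated copy. That is the trade the hybrid model makes: slightly stale content in exchange for never blocking on a full rebuild.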

Decoupling / composability

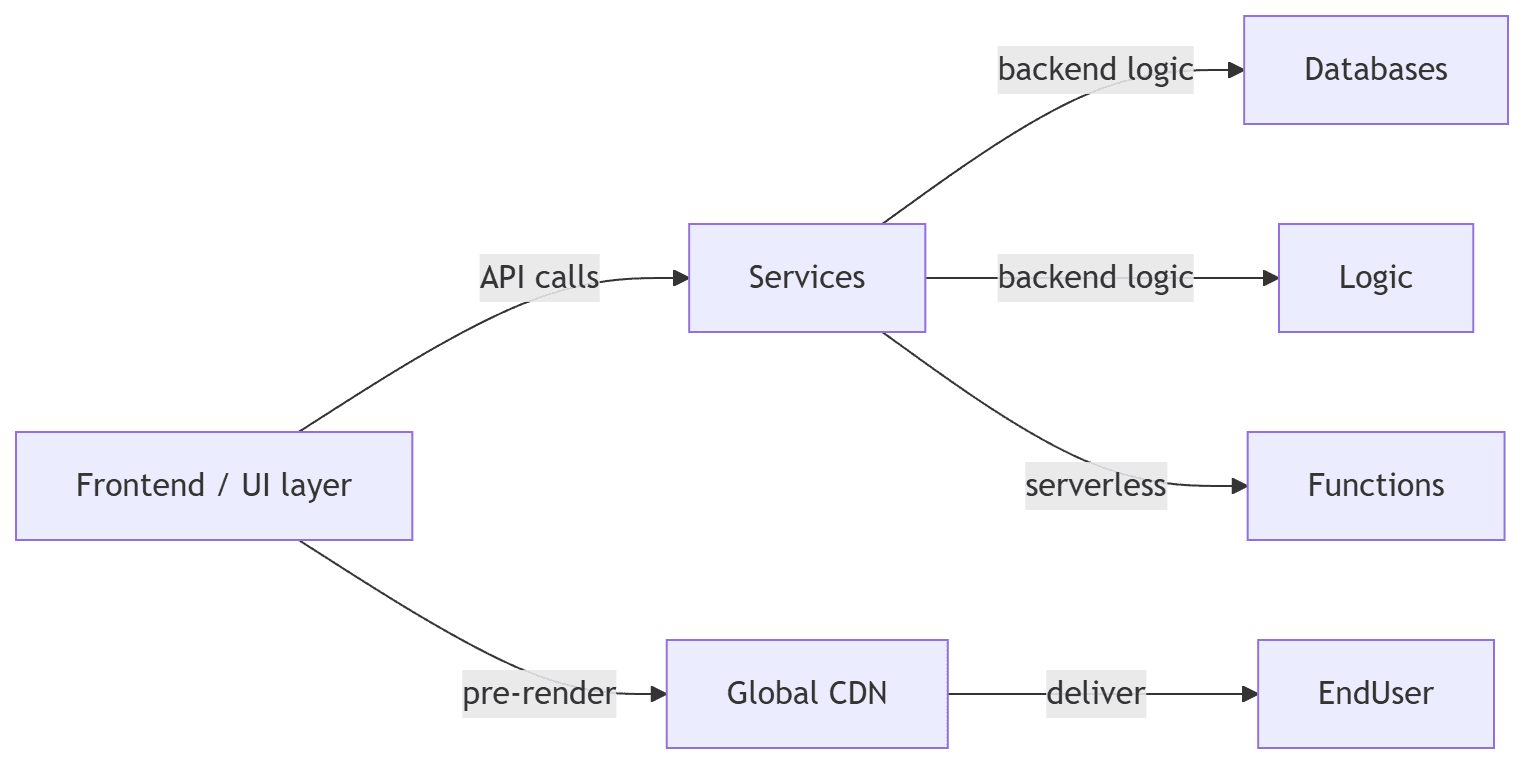

Decoupling means separating the front-end (UI) layer from backend logic, data, and services. The frontend fetches data via APIs or serverless functions. JAMstack adopted this explicitly: the frontend is “agnostic,” driven by markup + JavaScript + APIs.

In 2025, this principle surfaces as composable architectures: you pick best-of-breed services (e.g. headless CMS, authentication, search, payment) and stitch them rather than building a monolith. The front-end becomes a “presentation shell” that aggregates APIs at runtime or during build.

Thus, JAMstack is less a rigid set of rules and more a philosophy: push static as far as possible, isolate runtime behavior to API boundaries, and build the site from composable building blocks.

Mermaid sketch of the architecture concept in 2025:

```mermaid
graph LR
  UI["Frontend / UI layer"] -->|API calls| Services
  UI -->|pre-render| CDN["Global CDN"]
  Services -->|backend logic| DB["Databases & Logic"]
  Services -->|serverless| Functions
  CDN -->|deliver| EndUser
```

You can see the frontend is statically delivered, but for runtime behavior the UI talks to backend services or serverless functions.
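To make the "presentation shell" idea concrete, here is a rough sketch of a function that gathers data from several independent services and tolerates individual failures. The service names and return shapes are hypothetical placeholders; the only platform feature relied on is the standard `Promise.allSettled`.

```javascript
// Sketch: a presentation shell that aggregates data from independent services.
// `services` maps a hypothetical service name to an async loader function.
async function composePage(services) {
  const names = Object.keys(services);

  // Wrap each call in an async function so synchronous throws also become rejections.
  const results = await Promise.allSettled(
    names.map(async (name) => services[name]())
  );

  const data = {};
  const failed = [];
  results.forEach((result, index) => {
    const name = names[index];
    if (result.status === "fulfilled") {
      data[name] = result.value;
    } else {
      data[name] = null; // the UI can render a placeholder for this slot
      failed.push(name);
    }
  });

  return { data, failed };
}
```

A call such as `composePage({ cms: loadArticle, search: loadSuggestions })` (both loaders being your own functions) returns whatever succeeded plus a list of what failed, so one unavailable service does not take the whole page down.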

What’s changed in recent years

Because the web keeps moving, JAMstack in 2025 is not quite what it was in 2017. Let me highlight key shifts that influence when (and whether) JAMstack makes sense today.

Emergence of hybrid / edge rendering

Frameworks like Next.js (App Router), Nuxt, and others now support hybrid rendering—mix static, SSR, and on-demand or edge rendering per route. This blurs the boundaries: you don’t need to choose “pure static” vs “pure SSR” globally; you can tailor per page.

Edge runtimes (Cloudflare Workers, Vercel Edge Functions, Netlify Edge Functions) allow serverless logic to execute closer to the user, reducing latency. Dynamic operations can thus approach static-like speed.

Tooling, build speed & incremental approaches

Local development is dramatically faster (e.g. Vite, esbuild) compared to old bundlers. Also, build systems increasingly support incremental and on-demand builds: only rebuild changed pages or generate new pages in response to demand, not the entire site. This reduces deployment friction for large content sites.
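The core of an incremental build can be sketched as a comparison between content hashes from the previous build and the current content. This is an illustrative simplification, not the algorithm of any specific build tool: `planIncrementalBuild` and the manifest shape are hypothetical, and the small FNV-1a-style hash stands in for the cryptographic digest a real tool would more likely use.

```javascript
// Sketch: decide which pages to rebuild by comparing content hashes.
// hashContent is a simple FNV-1a-style hash over UTF-16 code units,
// used here only to keep the example dependency-free.
function hashContent(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return (hash >>> 0).toString(16);
}

// previousManifest: { [path]: digest } from the last build (hypothetical shape).
// pages: { [path]: sourceContent } for the current build.
function planIncrementalBuild(previousManifest, pages) {
  const nextManifest = {};
  const rebuild = [];

  for (const [path, content] of Object.entries(pages)) {
    const digest = hashContent(content);
    nextManifest[path] = digest;
    // New pages have no previous digest; changed pages have a different one.
    if (previousManifest[path] !== digest) rebuild.push(path);
  }

  // Pages present last time but gone now should be removed from the output.
  const removed = Object.keys(previousManifest).filter((path) => !(path in pages));

  return { rebuild, removed, nextManifest };
}
```

Persisting `nextManifest` between deployments is what lets the next build skip unchanged pages. Note that this sketch ignores shared dependencies such as layouts or global data, which a real system would also need to track.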

Ecosystem maturity

The headless CMS space is robust (e.g. Contentful, Strapi, Sanity), offering versioning, preview, collaboration, and APIs. Third-party APIs for payments, identity, search, messaging, etc., are readily available and battle-tested. That lowers the barrier to adopting an API-first architecture.

Pitfalls exposed at scale

As more teams push JAMstack into complex use cases, you begin to see where the model strains: overly long build times, preview workflows that lag, API rate limits or latency, coupling through APIs, complex deployments, and platform lock-in. Some developers question whether JAMstack is “still worth it” in all cases.

Hence, we must be more circumspect: JAMstack is powerful, but it isn’t a silver bullet.

When JAMstack shines (use cases in 2025)

Let’s talk about the sweet spots, where the advantages strongly outweigh the trade-offs.

1. Marketing sites, landing pages, microsites

These tend to have high performance requirements, moderate content updates, and simpler dynamic needs (forms, analytics). JAMstack enables near-instant global delivery, strong SEO, and easy scaling.

2. Documentation, knowledge bases, blogs, API portals

These are content-heavy, change relatively infrequently, and benefit from versioning, search, and stable infrastructure. Many major docs sites adopt JAMstack for these reasons.

3. E-commerce with static product catalog + dynamic cart

You can statically generate product pages while offloading cart, checkout, user accounts, inventory, and personalization into APIs or serverless layers. Many hybrid JAMstack e-commerce setups succeed this way.

4. Marketing-driven SaaS landing + dashboards

Often the public-facing marketing site is static, while the dashboard is built separately. The front-end portion benefits from JAMstack, and the app itself sits in a more traditional backend.

5. MVPs and prototypes

When you want a fast time-to-market, starting on a JAMstack architecture helps you experiment, scale, and evolve without building full infrastructure from day one.

6. Sites with high burst traffic

Because JAMstack serves from CDNs and static assets, it naturally handles spikes (product launches, campaigns, viral content) without auto-scaling backend complexity.

In these domains, you gain:

- Fast performance & low latency

- Robust security (fewer runtime surfaces)

- Easy scalability

- Lower operational burden

- Clean separation of concerns

When JAMstack struggles (anti-patterns in 2025)

JAMstack is not the right tool for every scenario. Knowing the boundaries is critical.

1. Highly dynamic, real-time applications

Chat apps, multiplayer games, collaborative editors, streaming, auctions—all require low-latency, server-managed state and often persistent connections (e.g. WebSockets). JAMstack is ill-suited for those.

2. Complex authentication / RBAC / business logic

If you have deeply nested role-based logic or server-driven workflows per request, you may hit friction in pushing that entirely into APIs or serverless functions. Latency, rate limits, cold starts, and orchestration become concerns.

3. Very large content sites with frequent edits / preview requirements

If your site has thousands of pages with constant updates and strong preview/editor workflows, full rebuilds or even incremental builds may lag. The preview (what editors see before publishing) might not synchronize near real time. Build failures or queue backlog become frustrating.

4. Heavily data-driven dashboards (analytics, BI)

If every page must query database aggregates, perform joins, or depend on real-time data, pushing that logic behind APIs may lead to performance or complexity trade-offs compared to more traditional SSR or server-driven rendering.

5. When latency / API reliability becomes a burden

When your site’s performance depends on external APIs, you become vulnerable to API rate limits, outages or cold-start latencies. For example, if your site fetches search results or pricing dynamically on many pages, latency may degrade UX.
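A common mitigation is to bound how long a page waits on an external API and fall back to a default or cached value. The helper below is a sketch under stated assumptions: `withTimeoutFallback` is a hypothetical name, the caller supplies both the task and the fallback, and the underlying request is not cancelled when the timeout wins.

```javascript
// Sketch: race an API call against a timeout and fall back if it loses or fails.
// `task` is any function returning a promise; `fallback` is caller-supplied.
async function withTimeoutFallback(task, timeoutMs, fallback) {
  let timer;
  const timeout = new Promise((resolve) => {
    timer = setTimeout(
      () => resolve({ ok: false, value: fallback, reason: "timeout" }),
      timeoutMs
    );
  });

  // Convert both success and failure into a plain result object.
  const attempt = Promise.resolve()
    .then(task)
    .then(
      (value) => ({ ok: true, value }),
      () => ({ ok: false, value: fallback, reason: "error" })
    );

  try {
    return await Promise.race([attempt, timeout]);
  } finally {
    clearTimeout(timer); // avoid leaving the timer pending after a fast response
  }
}
```

For pricing or search widgets, the fallback might be the value baked in at build time, so the page stays usable even when the live API is slow.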

6. When your team lacks experience or infrastructure

If your team is unfamiliar with API-first architecture, and you cannot invest in tooling for deployment, preview, developer workflows, or monitoring, the added complexity might outweigh the benefits for your scale or budget.

In such cases, SSR, server-rendered frameworks, or a traditional monolith may remain more efficient.

Decision framework: How to choose

Here is a structured way to decide whether a JAMstack-based (or hybrid) architecture fits your project.

| Decision Question | If “Yes” | If “No” |
|---|---|---|
| Is performance / SEO / CDN delivery critical? | Favor JAMstack | Don’t prioritize it |
| Will most of your content pages be relatively static or semi-dynamic? | Favor JAMstack | Consider SSR or an SSR hybrid |
| Does your dynamic logic map naturally to APIs / serverless functions? | Favor JAMstack | Reconsider complexity |
| Do you expect frequent content changes with real-time preview needs? | Evaluate incremental build & preview systems; if they fall short, consider SSR or a backend-based CMS | Standard static builds are likely sufficient |
| Is your app architecture mostly stateful, real-time, or highly interactive? | Be cautious; likely SSR / SPA / server-rendered | JAMstack remains a candidate |
| Does your team have the skills and infrastructure (CI/CD, API integrations, monitoring)? | Favor JAMstack | Invest in infrastructure first |

You may start with a hybrid approach: statically render what you can, and fall back to serverless or edge functions when needed. Over time you can migrate more pieces into the static/composed domain as the system matures.

Sample architecture & code sketch

Here’s a minimal example: a blog with product pages and a dynamic cart.

- At build time, fetch content (Markdown or CMS) and the product catalog from an API to generate static pages.

- On the client, implement a cart widget that calls serverless APIs (e.g., /api/cart) or a third-party cart service.

- For pages that need real-time data (inventory, personalization), fetch data client-side or via edge functions.

Example (Next.js Pages Router style, with static generation and an API route):

```jsx
// pages/product/[slug].js
// fetchProducts and fetchProduct stand in for your catalog API client;
// the import path is hypothetical.
import { fetchProducts, fetchProduct } from '../../lib/catalog'

export async function getStaticPaths() {
  const products = await fetchProducts() // from catalog API
  return {
    paths: products.map((p) => ({ params: { slug: p.slug } })),
    fallback: 'blocking', // slugs not built yet are rendered on first request
  }
}

export async function getStaticProps({ params }) {
  const product = await fetchProduct(params.slug)
  // Assumes fetchProduct returns null/undefined for an unknown slug.
  if (!product) {
    return { notFound: true }
  }
  return {
    props: { product },
    revalidate: 60, // regenerate at most once every 60s
  }
}

export default function ProductPage({ product }) {
  // render product detail
  return <div>{product.name}</div>
}
```

```js
// pages/api/cart.js
export default async function handler(req, res) {
  if (req.method === 'POST') {
    // Validate, process cart addition, talk to backend cart service
    return res.status(200).json({ success: true })
  }
  // Any other method is rejected; tell the client which one is allowed.
  res.setHeader('Allow', 'POST')
  return res.status(405).end()
}
```

In this setup, the product pages are statically generated, periodically revalidated, and dynamic behavior (cart) is offloaded to API endpoints. You gain the static footprint where possible without sacrificing dynamic features.
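On the client side, the cart widget could talk to the `/api/cart` endpoint with a small helper along these lines. This is a sketch, not part of the original example: `addToCart` is a hypothetical name, the `{ sku, quantity }` payload shape is an assumption, and the optional `fetchImpl` parameter exists only to make the helper easy to test.

```javascript
// Sketch of a client-side helper for the /api/cart endpoint.
// The payload shape ({ sku, quantity }) is an assumption for illustration.
async function addToCart(item, fetchImpl = fetch) {
  const response = await fetchImpl("/api/cart", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(item),
  });

  // Surface non-2xx responses (such as the 405 for non-POST methods) as errors.
  if (!response.ok) {
    throw new Error(`Cart request failed with status ${response.status}`);
  }

  return response.json();
}
```

A cart button's click handler could then call `addToCart({ sku: "abc", quantity: 1 })` and update the UI from the resolved value, showing an error state if the promise rejects.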

Best practices and caveats in 2025

- Use incremental / on-demand / ISR (incremental static regeneration) to avoid full rebuilds for large sites.

- Incorporate preview workflows to allow content editors to see drafts before publishing.

- Cache API responses aggressively at the edge to reduce runtime latency.

- Monitor cold-start, rate limits, and error rates in serverless APIs.

- Design fallback UI (loading / skeleton) for pages waiting on client-side fetches.

- Degrade gracefully: if an API fails, the statically generated page should still show basic content.

- Stay mindful of platform lock-in (Netlify, Vercel, Cloudflare), and design abstraction layers if you may migrate.

- Start simple. Treat JAMstack as a spectrum rather than all-or-nothing.
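As one illustration of the edge-caching advice, an API route can opt into shared (CDN) caching through the `Cache-Control` response header. The helper below is a sketch: `edgeCacheControl` and its option names are hypothetical, the durations are placeholders, and while `s-maxage` and `stale-while-revalidate` are standard directives, how a given CDN honors them varies by provider.

```javascript
// Sketch: build a Cache-Control value for responses cached at the CDN.
// Option names are hypothetical; durations are in seconds.
function edgeCacheControl({ sharedMaxAgeSeconds, staleWhileRevalidateSeconds = 0 }) {
  // "public" plus s-maxage lets shared caches store the response for that long.
  const directives = ["public", `s-maxage=${sharedMaxAgeSeconds}`];

  // Optionally allow a stale copy to be served while a fresh one is fetched.
  if (staleWhileRevalidateSeconds > 0) {
    directives.push(`stale-while-revalidate=${staleWhileRevalidateSeconds}`);
  }

  return directives.join(", ");
}
```

In a handler like the cart-style API route shown earlier, this could be applied with `res.setHeader("Cache-Control", edgeCacheControl({ sharedMaxAgeSeconds: 60, staleWhileRevalidateSeconds: 300 }))`. It only makes sense for responses that are safe to share between users, so not for per-user cart contents.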

Summary and takeaway

JAMstack is a refined architectural philosophy embedded across the modern web. Its strengths (performance, security, scalability, composability) remain compelling in many use cases. Yet to wield it wisely, you must judge your project’s dynamic needs, content volume, real-time expectations, and team capability.

If your use case involves content rendering, landing pages, documentation, or an e-commerce catalog with manageable dynamic parts, JAMstack (or a hybrid variant) is often an excellent choice. Conversely, if your app is highly interactive, heavily data-driven in real time, or demands complex business logic per request, a more traditional or SSR-based architecture may be safer.

In short: use JAMstack when its paradigm aligns with your requirements—but don’t force it where the match is poor.