Agile teams have leaned on user stories for two decades because they keep everyone aligned on why a feature exists—not just how it’s implemented. The classic pattern, popularized in the early 2000s—“As a [role], I want [goal] so that [benefit]”—emerged alongside practices like “card, conversation, confirmation.” It let product and engineering speak the same language without drowning in specification boilerplate.

Yet most codebases—especially legacy or open-source—don’t come with a tidy scrapbook of user stories. Specs vanish; code survives. That’s why “reading intent from code” has become a recurring research thread. Prior work got close by converting text (requirements docs, issues, interviews) into stories, but it still assumed the text existed. Recent examples include Rahman & Zhu’s GeneUS, which uses GPT-4 to transform requirements documents into structured user stories. Useful—until you open a repo with only code.

A new preprint tackles the missing piece: Can large language models (LLMs) recover user stories directly from source code? Using 1,750 C++ snippets with human-authored, reconciled ground truth, the authors test multiple models and prompting styles. The eye-catcher: for code up to ~200 non-comment lines (NLOC), several models average around 0.8 F1 against reference stories—strong “adequate paraphrase” territory. And a well-prompted 8B model can match a 70B model, which is the kind of practical result that changes roadmaps.

The backstory: from code summaries to intent

We’ve known for years that LLMs summarize code pretty well; summarization matured from rules, to neural, to today’s foundation models. But a summary explains what a function does. A user story explains why it matters for a person. That difference makes stories accessible to non-developers and useful for onboarding, retrospectives, and roadmap hygiene. Meanwhile, the datasets to study this at scale have arrived. IBM’s CodeNet includes millions of solutions (C++ is heavily represented), and industry trendlines confirm C++’s persistent relevance in performance-critical software. These give researchers a realistic playground for mapping code → intent.

What the new study actually did

The authors sampled 1,750 C++ snippets across 35 size bands (1–350 NLOC), wrote human reference stories, and evaluated five LLMs using multiple prompts:

- Model families: Llama 3.1 (8B, 70B, 405B), DeepSeek-R1 (reasoning-optimized), and OpenAI GPT-4o-mini (base + light fine-tuning).

- Prompt styles: zero-shot, one-shot, few-shot; with/without Structured Chain-of-Thought (SCoT)—a reasoning schema that mirrors program control flow (sequence/branch/loop).

- Metric: BERTScore (F1), selected after a head-to-head against BLEU/ROUGE for better sensitivity to meaning, not surface words.

Two practical wrinkles show up in the data:

- Performance dips as inputs get longer—classic “lost-in-the-middle” behavior in long contexts.

- Reasoning scaffolds like SCoT help larger models more than smaller ones; exemplar prompting (a single good example) helps smaller models a lot.

The headline results (and what to do with them)

First, it works: across models, user-story generation from code is meaning-faithful for typical function/class sizes. The study reports average F1 ≈ 0.8 for ≤200 NLOC. Second, smart prompting > sheer size: a single illustrative example often lifts an 8B model to 70B territory, while SCoT brings marginal gains primarily for bigger models. Third, cost dynamics matter: medium-sized models can be cost-effective, and fine-tuned small models shrink output length and spend. (Context: vendors publish different price points; GPT-4o-mini launched with aggressive pricing; DeepSeek and Llama pricing varies by host.)

Why this is actionable for you

If you maintain a large or aging codebase, this technique can backfill intent where docs lapsed. If you run an open-source project, generated user stories can lower contributor friction. If you’re building an internal platform, a small model with a good one-shot prompt might be all you need—cheap to run on a single GPU or via a managed host like DigitalOcean.

What “good” prompting looked like

The authors’ biggest practical win: one-shot prompting. One well-chosen example shows the model the target form and level of abstraction, without drowning it in demonstrations that bloat context (and cost).

Here’s a minimal, battle-tested pattern you can adapt:

You are generating user stories from code.

Output one story in this format: "As a <role>, I want <goal> so that <benefit>."

Stay high-level (user intent), not implementation details.

Example:

[CODE]

int shortestPath(...) { /* ... Dijkstra ... */ }

[/CODE]

[STORY]

As a logistics planner, I want to compute the most efficient route so that I can reduce delivery time and costs.

[/STORY]

Now do this:

[CODE]

<PASTE SNIPPET HERE>

[/CODE]

[STORY]Two tips borne out by the paper and broader literature: front-load instructions so they aren’t “forgotten” mid-context; and keep the exemplar tight to avoid the long-context drop-off.

Where SCoT helps (and where it doesn’t)

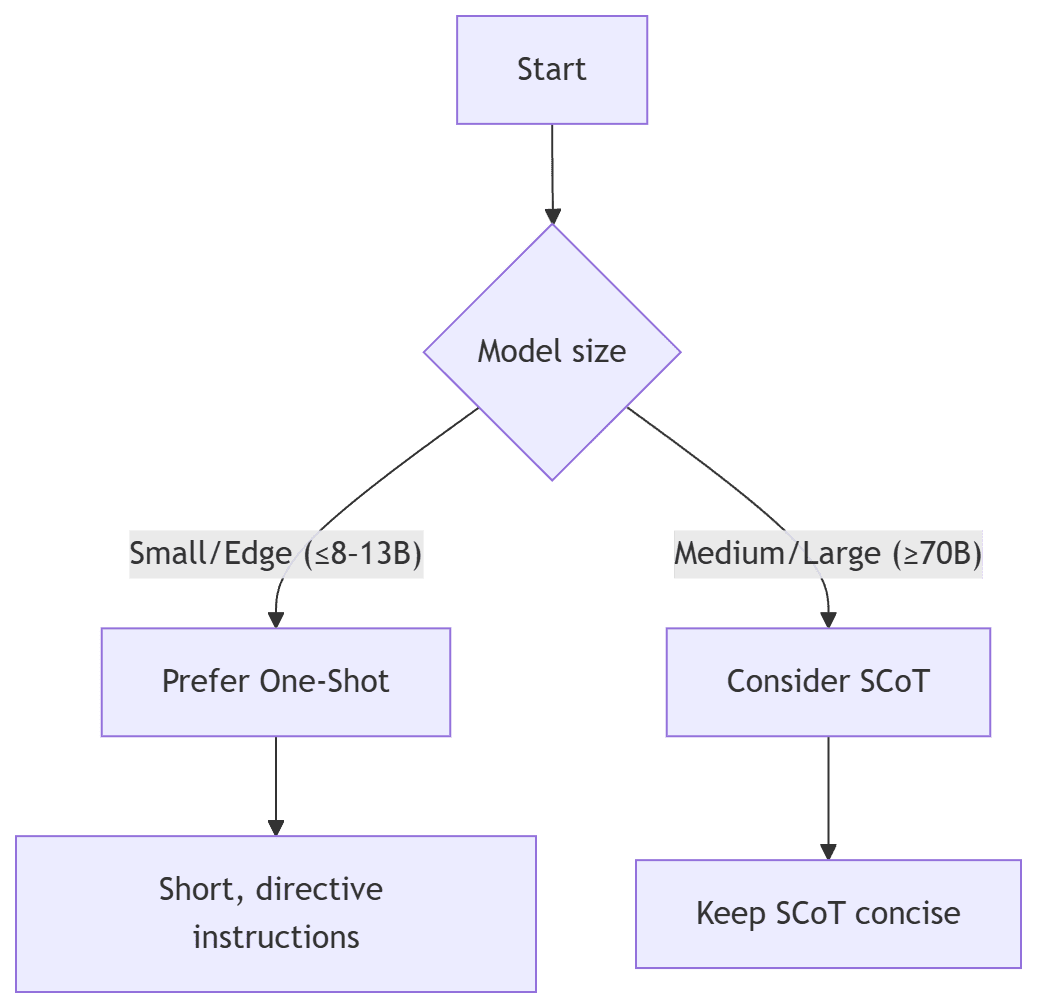

Structured Chain-of-Thought decomposes reasoning into control-flow-like steps (sequence, branch, loop). That mirrors code and can focus larger models on the right abstractions. In the study, SCoT provided modest gains for 70B/405B but brought little or even negative change for smaller models.

A quick mental model:

graph TD

S[Start] --> M{Model size}

M -- Small/Edge (≤8–13B) --> E1[Prefer One-Shot]

E1 --> O1[Short, directive instructions]

M -- Medium/Large (≥70B) --> E2[Consider SCoT]

E2 --> O2[Keep SCoT concise]

In plain terms: if you’re on a small model, spend your tokens on one strong example; if you’re on a bigger model, try SCoT when stories drift into “how” instead of “why.”

Evaluation that respects meaning, not surface words

BLEU and ROUGE reward n-gram overlap, not semantics. For paraphrase-heavy tasks like story generation, BERTScore (especially with bert-base-uncased) tends to separate “same meaning, new words” from “different meaning.” That’s why the authors favored it as their primary metric. If you replicate this at work, do the same—and spot check with humans.

Limits to keep in mind

The dataset is competitive-programming C++—self-contained, not sprawling microservice mazes. That’s a reasonable place to start; it just means you should expect to combine hierarchical extraction (function → class → module) and chunking for large systems. Industry data suggests C++ still matters (especially performance-bound domains), so results here aren’t a curiosity—they’re relevant.

Try this on your codebase in an afternoon

- Pick a representative function/class (≤200 NLOC).

- Run the one-shot prompt above using your preferred model host (cloud or local).

- Skim the output for role/goal/benefit and adjust the exemplar until it nails your voice.

- Add a thin post-processor that enforces the template and rejects implementation details (e.g., algorithm names) unless they’re truly user-visible.

When you’re ready to scale, wire the pipeline into CI to regenerate stories on diff—then surface them in your docs or issue tracker. For hosting and cost controls, vendors vary widely (OpenAI’s mini models, Llama variants via third-party hosts, reasoning-centric models like DeepSeek-R1); pick what fits your latency and budget envelope.

The bottom line

You can recover the “why” from code at useful fidelity today. The path of least resistance isn’t a giant model; it’s a right-sized one with a clear one-shot prompt and—when warranted—light structured reasoning. Start small, measure with a semantic metric, and ship the stories where people actually work: onboarding docs, PR templates, and sprint reviews. Then, as the paper suggests, use these stories to sanity-check AI coding assistants: if the generated code’s implied story drifts from your intent, that’s a red flag worth catching early.