For years, researchers have hinted that the invisible waves zipping between your phone and router carry more than just data. They also carry subtle clues about what is happening in a room. Now a team in Japan says it can turn those clues into actual pictures that look like what a camera would see, using only WiFi signals. The system, called LatentCSI, comes from the Institute of Science Tokyo and it uses the same kind of AI behind popular image generators to draw scenes from the ripples in the air.

If that sounds eerie, it is also practical. Cameras are powerful but they are obvious and can be intrusive. WiFi is already in your home and it works in the dark and through thin obstacles. For the last decade scientists have used WiFi’s channel state information, the way signals bounce around people and furniture, to detect motion, recognize activities and even monitor breathing. Most of those systems stopped at graphs and labels, not pictures, because turning raw signal patterns into full images proved too hard and too slow. Surveys of the field have repeatedly flagged that gap.

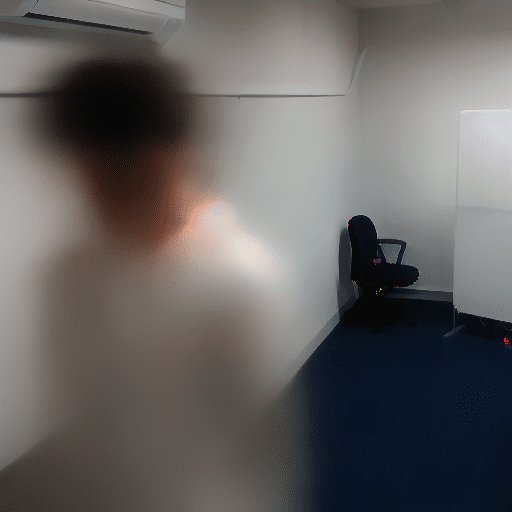

LatentCSI claims a neat workaround. The researchers do not try to paint every pixel from WiFi. Instead they train a small neural network that converts WiFi measurements into the compressed “latent” representation used by a powerful, already trained image model, the same family of diffusion models that made Stable Diffusion famous. The heavy lifting is handled by that pretrained model, which expands the compressed representation back into a high resolution picture. In tests on a custom indoor walk dataset and on the MM-Fi human sensing benchmark, the method produced images that independent quality metrics rate as more realistic than earlier WiFi approaches, and the team reports it trained far faster than systems that predict pixels directly.

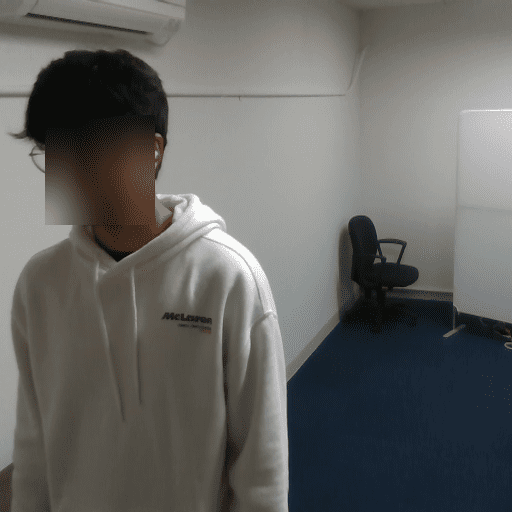

Just to clarify: the images aren’t direct reconstructions from WiFi signals. Instead, the WiFi data is used as an extra conditioning input for a standard diffusion model. In practice, that means the WiFi measurements guide the placement of objects in the scene, while the diffusion model fills in the missing details with visually plausible—though not necessarily accurate—content.

Why people might care depends on where they sit. In elder care, a home could detect a fall at night without indoor cameras. In firefighting or search and rescue, responders could get a sketch of a smoke filled room where cameras fail. In industrial sites, operators could monitor restricted spaces without installing lenses. Prior research already showed WiFi can reconstruct silhouettes or poses through walls and in low light. Turning that into images, especially with a tool that runs faster and on cheaper hardware, could push the tech from lab demo to deployment.

There is a catch that cuts both ways. Because the system uses a compressed representation, it often loses fine details such as faces (see above). The researchers present that as a privacy feature, since it reduces the risk of recreating someone’s exact appearance. At the same time the image model can be guided by text prompts, which means the same WiFi snapshot can be rendered as a realistic photo or stylized as a drawing. The flexibility is appealing for product teams and unsettling for critics who worry about how generative AI can shape what people think they see. These trade offs are not abstract, regulators and security researchers have warned that diffusion models can be attacked or misused, which raises fresh questions when they are paired with always on household infrastructure.

To understand how much of a shift this could be, it helps to look back. The first wave of WiFi to image work leaned on GANs, a now classic approach to synthetic imagery that is notoriously finicky to train. A well known 2020 project called CSI2Image showed the idea was possible at small image sizes, but it struggled with motion and scale. The new study borrows the playbook from modern image generators, which moved from pixel space into a leaner latent space, then rode that efficiency to better quality and speed. That is the same pivot that let diffusion models set records for image realism while slashing compute costs.

The stakes are clear. If routers learn to see, even in a limited way, offices and apartment blocks could gain a form of ambient awareness that helps with safety, energy use and accessibility. The same capability could enable a new class of covert surveillance that does not need visible cameras or bright signage. The researchers trained their system with cameras during setup then switched them off, which mirrors how a building owner might justify using it, calibrate once, monitor quietly forever. That pattern will force hard choices for lawmakers who have spent years catching up to facial recognition and smart doorbells. They will now have to decide whether the right to be off camera applies when there is no camera at all.

The road from paper to product is not short. Results today come from controlled indoor tests with matched WiFi and video, and the hardware and layouts in the wild are messy. Making the system robust to different rooms, devices and multiple people is an open job. Still, the direction of travel is unmistakable. For a field that has promised camera free sensing for a decade, LatentCSI puts a familiar, and possibly controversial, picture within reach.

If you want the short version, your router may never be a camera, but thanks to advances in AI it could become something stranger, a way to sketch a room from the way signals move through it. The benefits are real for safety and convenience. So are the questions about consent and control.